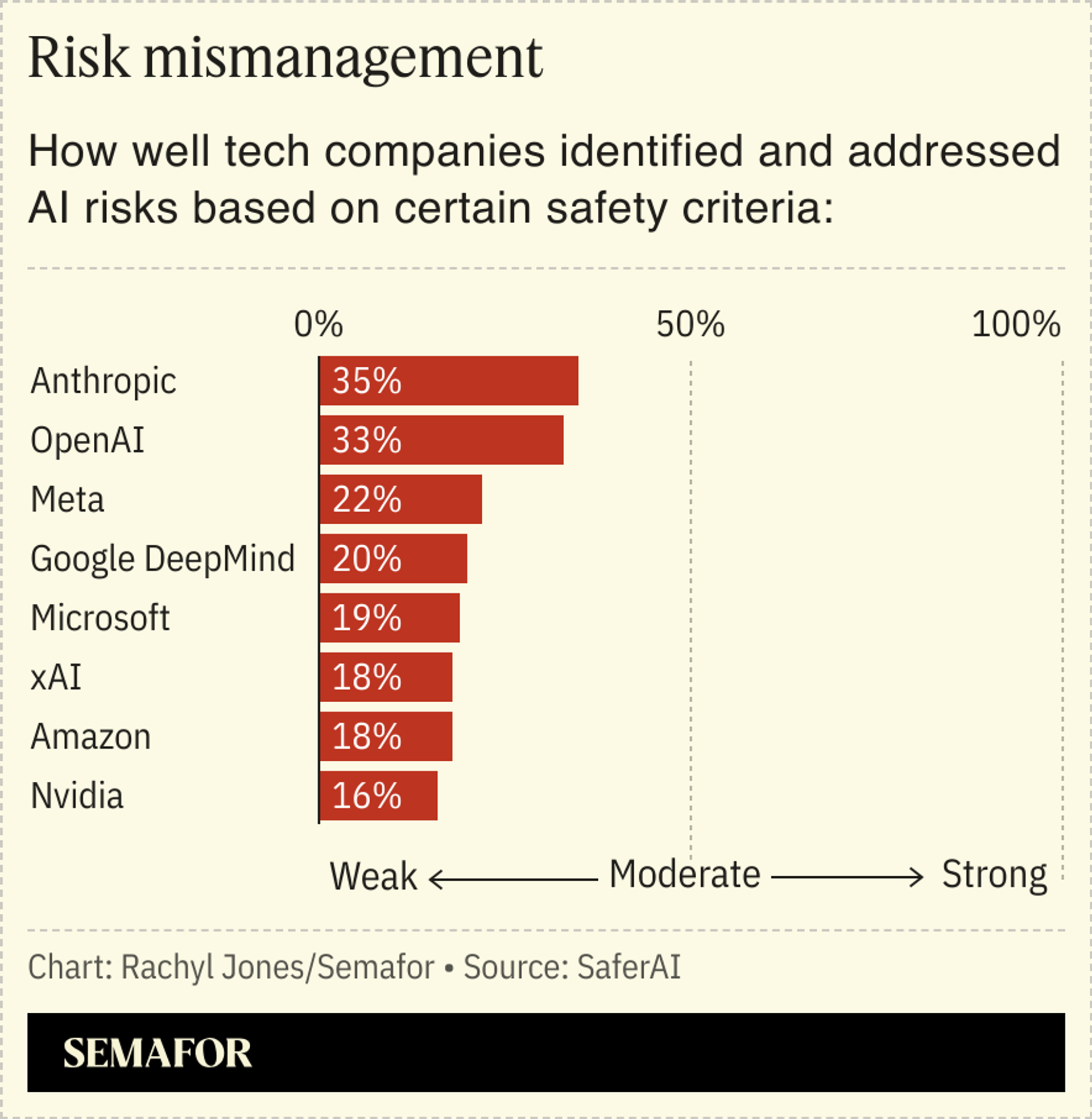

AI companies, even those that tout AI safety such as Anthropic, are nowhere near having strong risk management practices, according to a new report released Thursday by the risk management nonprofit SaferAI.

The France-based nonprofit reviewed the frontier safety frameworks (documents outlining risk management protocols) that the companies published this year. It scored them on 65 safety criteria, using a scale where 0% means a principle is not mentioned in the framework at all and 100% means the corresponding mitigation measures were implemented in an exemplary fashion. The criteria include whether the companies test their models with third parties and whether they disclose how often they evaluate their models.

“Initially, [the companies] weren’t even writing down their plans,” SaferAI CEO Siméon Campos told Semafor. “It was a big step for many of these companies to write their plans, but many are still far from what experts consider to be adequate.”

SaferAI ran the same analysis in October 2024 based on earlier versions of the frameworks. Since then, Meta has gained serious ground and overtaken Google DeepMind, which scored twice as high as Meta in the previous round. In a statement to TIME, DeepMind said, "We are committed to developing AI safely and securely to benefit society." It added that the report does not account for all of DeepMind's safety efforts.

The SaferAI report aligns with a similar analysis released Thursday by the Future of Life Institute, a California-based nonprofit working to mitigate the dangers of AI, which also found that major AI companies did a poor or only adequate job of managing safety and risk.