The News

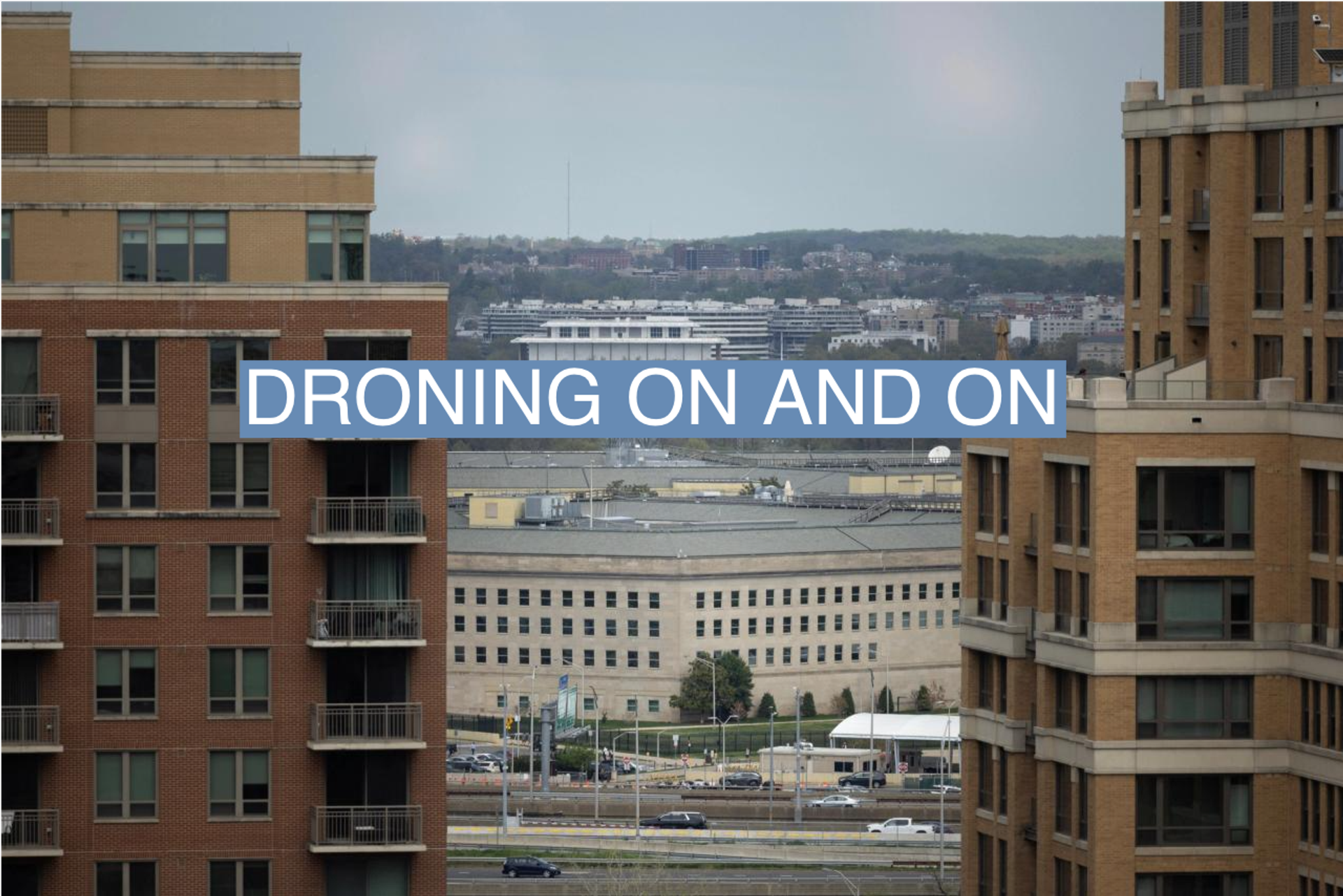

The U.S. Department of Defense has ramped up its efforts over the last year to develop AI-powered autonomous weapons that promise to change the face of warfare, and officials argue they are key to bolstering the country’s military defenses against rival powers such as China and Russia.

Autonomous weapons systems — labeled by critics as slaughter bots, killer drones, or killer robots — use artificial intelligence to seek out and kill targets with less human intervention.

The war in Ukraine represents the “long-feared arrival of autonomous weapons in combat,” Defense One reported. AI interception systems are also being used to detect and thwart attacks, such as those launched by the Iranian-backed Houthis in the Red Sea.

But the arrival of such weapons has raised concerns among human rights groups, who question the ethics governing their deployment.

SIGNALS

The U.S. wants to craft international standards for building autonomous weapons

The U.S. has tried to foster international cooperation on the rules that should govern the use of autonomous weapons and limit the possibility of catastrophic error. No international treaties currently govern how such tools can be deployed, but the U.S. State Department published a political declaration on military use of AI last year, which has so far garnered 51 signatories, including many European nations, South Korea, and Australia. Last year, the Pentagon also revised its policy on the military’s development of AI weapons to ensure they are vetted by senior leaders. “We think of this as good governance so countries can develop and deploy AI-enabled military systems safely, which is in everyone’s interest,” said a senior Pentagon official at a talk hosted by the Center for Strategic and International Studies. “Nobody wants…systems that increase the risk of miscalculation or that behave in ways that you can’t predict.”

AI-powered weapons raise potential risks on par with the nuclear era

The U.S. and U.K. militaries must rapidly overhaul their weaponry for the AI age if they are to win a major war — moving budgets from traditional aircraft and surface ships to unmanned, expendable drones and robots, defense experts argued in a report published this month. But the Pentagon’s Replicator initiative — announced last August to rapidly scale up the use of autonomous weapons — has been criticized by Congress and industry experts for being underfunded and vaguely defined. Human rights groups, meanwhile, have sounded the alarm about an emerging AI arms race, and many remain unconvinced that the U.S.-led ethical guidelines will offer humanitarian protection against robot weapons, The Hill reported. AI-powered weapons are a poorly understood, novel technology that should be viewed as “on par with the start of the nuclear era,” one researcher at the Future of Life Institute told the outlet, adding that “if we view this in an arms race way, which is what the Pentagon is doing, then we can head to global catastrophe.”

Generative AI is also part of the DoD’s plans to improve military capabilities

The DoD is experimenting with generative AI, which can be used to help plan for attacks in a “multi-threat environment,” argued Graham Evans, a vice president at Booz Allen Hamilton, in an interview with C3 AI. Earlier this month, ChatGPT maker OpenAI said it was reversing its ban on working with militaries in order to partner with the Pentagon. The company said it still prohibits the use of its generative AI tech to develop weapons but would begin working on projects related to cybersecurity and other areas.

Evans said the U.S. intelligence community believes China is likely to ramp up threats in the Indo-Pacific by 2027 or sooner, and that because the region is so vast, military planning involving generative AI will be crucial. Using machine learning to help make strategic decisions will be “paramount to successfully winning the environment,” he said. Troops have to synthesize large amounts of intelligence and information from the battlefield, and generative AI could help reduce the time and effort such tasks require.