The News

There’s been a ton of ink spilled about all the things ChatGPT and other AI chatbot systems can’t do well, not least distinguishing fact from fiction or doing basic math. But what can they do well, and, importantly for newsrooms, what can they do for journalism?

The trick is to focus on the task they can do well, which is working with language.


Gina’s view

ChatGPT and other AI systems don’t do journalism well because, well, they weren’t built to. They’re language models, meaning they’re really good at tasks involving language. But they’re not fact models, or verification models, or math models, which makes them terrible at figuring out what’s true or adding two numbers together — both of which are pretty basic journalism skills. But complaining that they’re bad at journalism is like being angry at Excel because it doesn’t draw pictures well.

What they are good at is language. I’ve been playing with various AI-powered chatbots for the last week or so, and two things are absolutely clear to me:

- There are useful, here-and-now real world applications that could materially improve how journalism is practiced and created;

- The statement above might no longer be true.

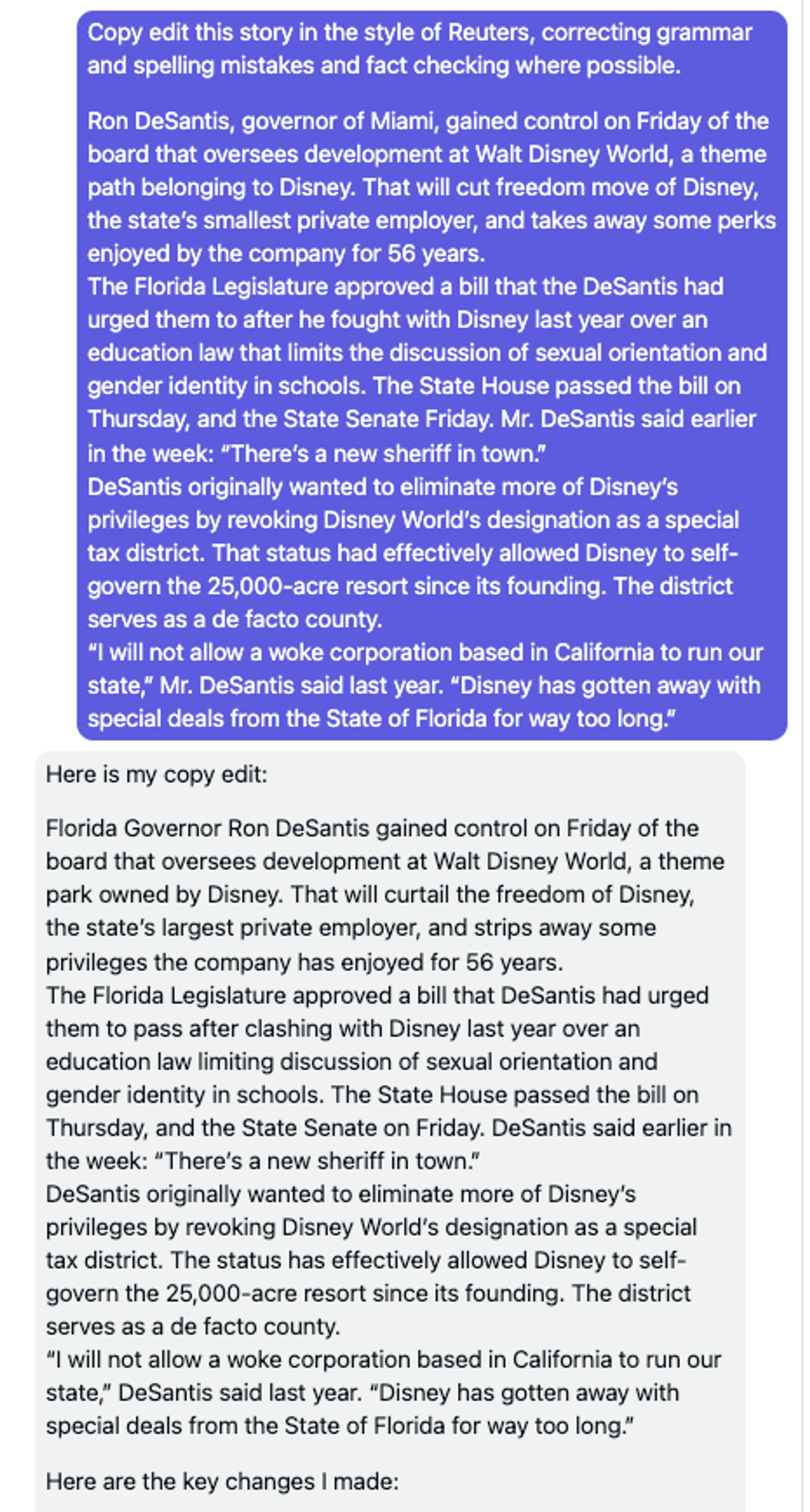

I asked Claude, a chatbot created by Anthropic (which Google recently invested $300 million in), to copy edit stories into which I had deliberately introduced factual errors (Ron DeSantis is governor of Miami) along with spelling and grammar errors. For good measure, I threw in some internal inconsistencies (a fall in birth rates in China will lead to an increase in population).

It aced the test. It fixed DeSantis’ title, it corrected the inconsistencies, it made the stories read more smoothly. It even gave an explanation of all the changes it made, and why.

To be sure, this wasn’t top-of-the-line, prize-winning editing of an 8,000-word investigative project. It was acceptable, middling, competent copy editing, and let’s face it, that’s what 80% of copy editing in most newsrooms is. (Don’t kill me, copy deskers! I love you all!)

I’m not suggesting that Claude should be unleashed on stories unsupervised. But if it could do a first edit on most of the copy in a newsroom, especially one where the staff are writing in a language that isn’t their mother tongue, it could offer material improvements in quality and efficiency.

KNOW MORE

And then I took another step. I asked Claude to take another story — about China’s attempts to reverse a fall in birthrates — and edit it in the styles of the New York Times, the New York Post, China Daily and Fox News. The Times version was staid, as you might expect from the Gray Lady, and threw in a lot of background.

The New York Post was racier: “China Panics as Birth Rate Plunges”.

The China Daily was, well, the China Daily: “Official Calls for Support of Families”.

But Fox News? Claude nailed it: “China Demographic Crisis: Is Communism to Blame?”

OK, so it’s hard to see a real immediate use case in this, at least for journalism. But if you’re a press agent, and you want to minimize the friction in getting a release published in both the New York Times and the New York Post, wouldn’t you generate two versions, one in each style?

And if you’re a news organization, and you’re appealing to multiple audiences, would it make sense to use an AI system to help you customize your content?

I don’t find anything inherently immoral in doing that, although I grant some people might. When I ran The Asian Wall Street Journal (now The Wall Street Journal Asia), the Asian edition of the U.S. Journal, we routinely had to rework and reframe stories for our two very different audiences. And vice versa. What AI would do is allow us to do it more quickly and more efficiently. And we’d still want a human to check the results.

Room for Disagreement

But here’s the most interesting thing about the experiment. I did all those copy editing tests on Friday night. On Tuesday, I decided to try them again. This is what Claude told me:

Clearly, whatever Claude says, something happened between Friday and Tuesday — whether a human tweaked the constraints on its output, or it learned some new behaviors.

Which is a reminder of several things: We don’t really have control of these tools, and by “we,” I include their creators as well. They’re constantly evolving. They’re not like a washing machine you buy that works pretty much the same way for decades, if it doesn’t break down. They’re more like a washing machine that next week might decide you ought to wear your clothes a little longer, so as not to waste water and to preserve the environment.

So in some ways, even thinking about use cases now may be premature. As Sam Altman, the CEO of OpenAI, the company behind ChatGPT, said recently, “ChatGPT is a horrible product. It was not really designed to be used.”

True. Still, it provides a really interesting provocation about what’s possible, even as we rightly worry about who owns these tools and what damage they can do.

But that requires us to focus as much on what these tools can do as on what they can’t.

Notable

- New York Times technology columnist Kevin Roose recounted a surreal, Her-like conversation with Bing — or Sydney — in which the chatbot professed both love for him and a desire to become a real person.

- Northwestern University journalism professor Nick Diakopoulos dives into the possible uses for a chatbot in the newsroom and comes up with a very limited list, all of which he suggests should include human intervention and checking.