The News

Chatbot-infused information systems are not off to a good start.

Microsoft’s ChatGPT-assisted Bing Chat service is being throttled to reduce oddball replies, and Google’s experimental Bard system managed to bungle an answer in a marketing demo, costing the company billions in market value. (Bing got things wrong too.)

Tech behemoths, and the public, have been so focused on the chatbots’ ability to hold human-like conversations with users that the core purpose of a search engine, which is to find useful and, ideally, accurate information, seems to have been overshadowed. Instead, the public has seized upon professions of love, angry denials of basic realities, and many more mundane “hallucinations” of incorrect facts.

Gina’s view

It didn’t have to be this way.

At its heart, a search engine does (at least to lay users like me) three things: take in a query (e.g., “how effective are COVID-19 vaccines?”) and turn it into a search term; hunt for information on the internet and make some kind of judgment about what’s credible; and then present it back to users. Sometimes that comes as a simple, authoritative answer (“The population of New York City was 8.468 million in 2021”) and sometimes as a list of links.

Google — the king of search engines — does that second part extremely well, thanks to PageRank and other proprietary algorithms that it’s developed over the decades; it’s doing better on the first part, although it’s still a long way away from providing a conversational interface.

And it does less well on the third part, often presenting a list of links that users have to plow through, although it’s getting better at synthesizing the information all the time. Chatbots, on the other hand, are terrible at the second thing — because, bluntly, they’re optimized for language output and not for fact-finding or fact-checking. When they try to aggregate disparate information into a single definitive answer, they often get things wrong, or “hallucinate.”

And the lack of citations or links in their authoritative-sounding answers means it’s nearly impossible to check the facts for yourself. On the other hand, the chatbots are pretty good at parsing language and generating language, because they’re, well, language models. Doh.

So why are tech companies enamored with integrating them into the entire search process — even the parts they’re not good at? Why not marry the two capabilities? Why not have a chatbot take a normal human question and turn that into a search term (that’s a language skill), have a link system for finding relevant web pages (that’s a search and ranking skill), and then use the chatbot to summarize them (another language skill)?

Which is what I tried to do.

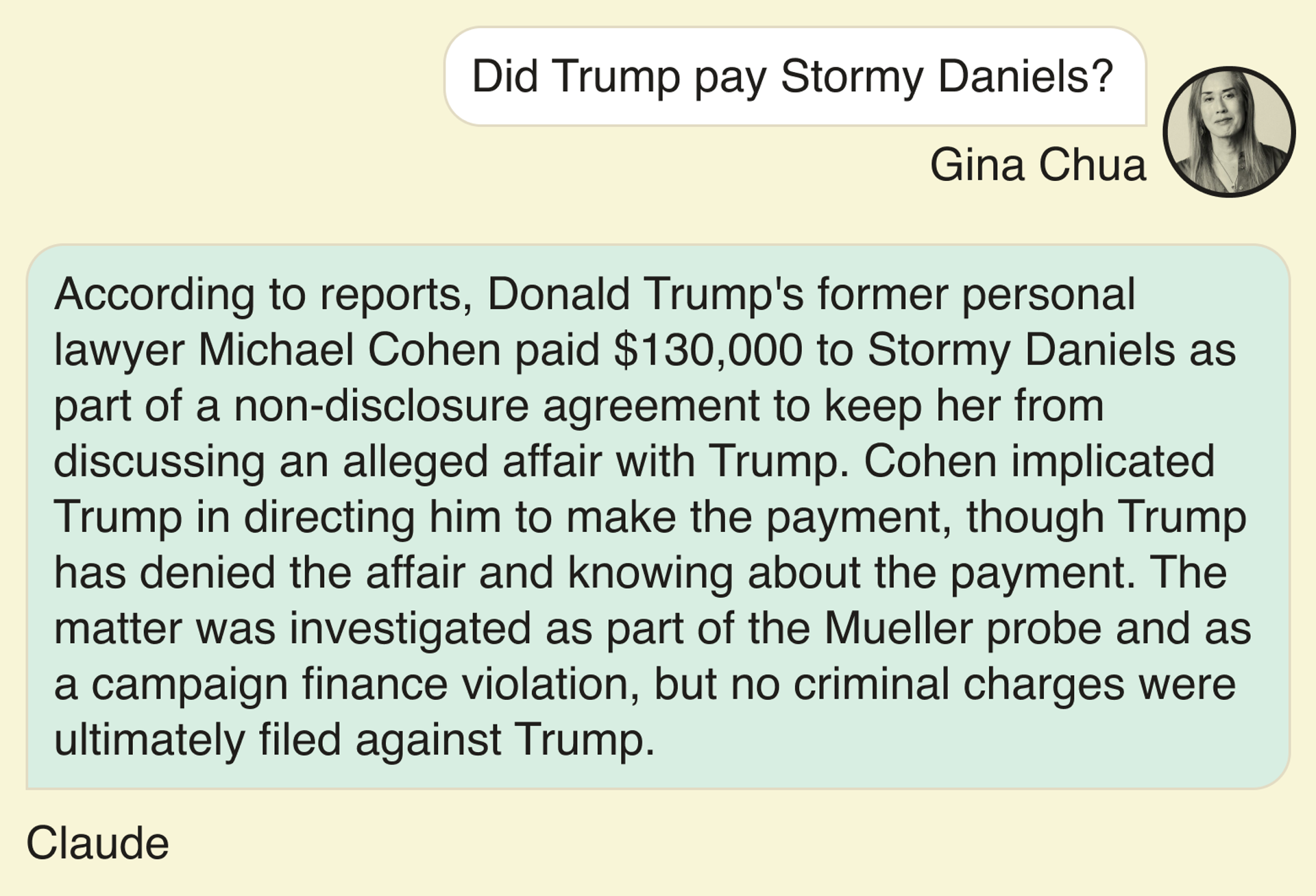

I used Claude (an AI chatbot built by Anthropic, in which Google just invested $300 million) to ask a simple question: Did Donald Trump pay adult film star Stormy Daniels for her silence? (I couldn’t ask an up-to-date question, because Claude’s training data doesn’t extend to the present day.)

Here’s what I got: First, I just asked a question and got the standard summary:

That’s a pretty decent response and is essentially accurate, at least as far as 2021 sources are concerned. But that’s because I already knew the answer. If I didn’t, how could I check? It provides no citations, offers no links, and really doesn’t give users a chance to verify the information for themselves.

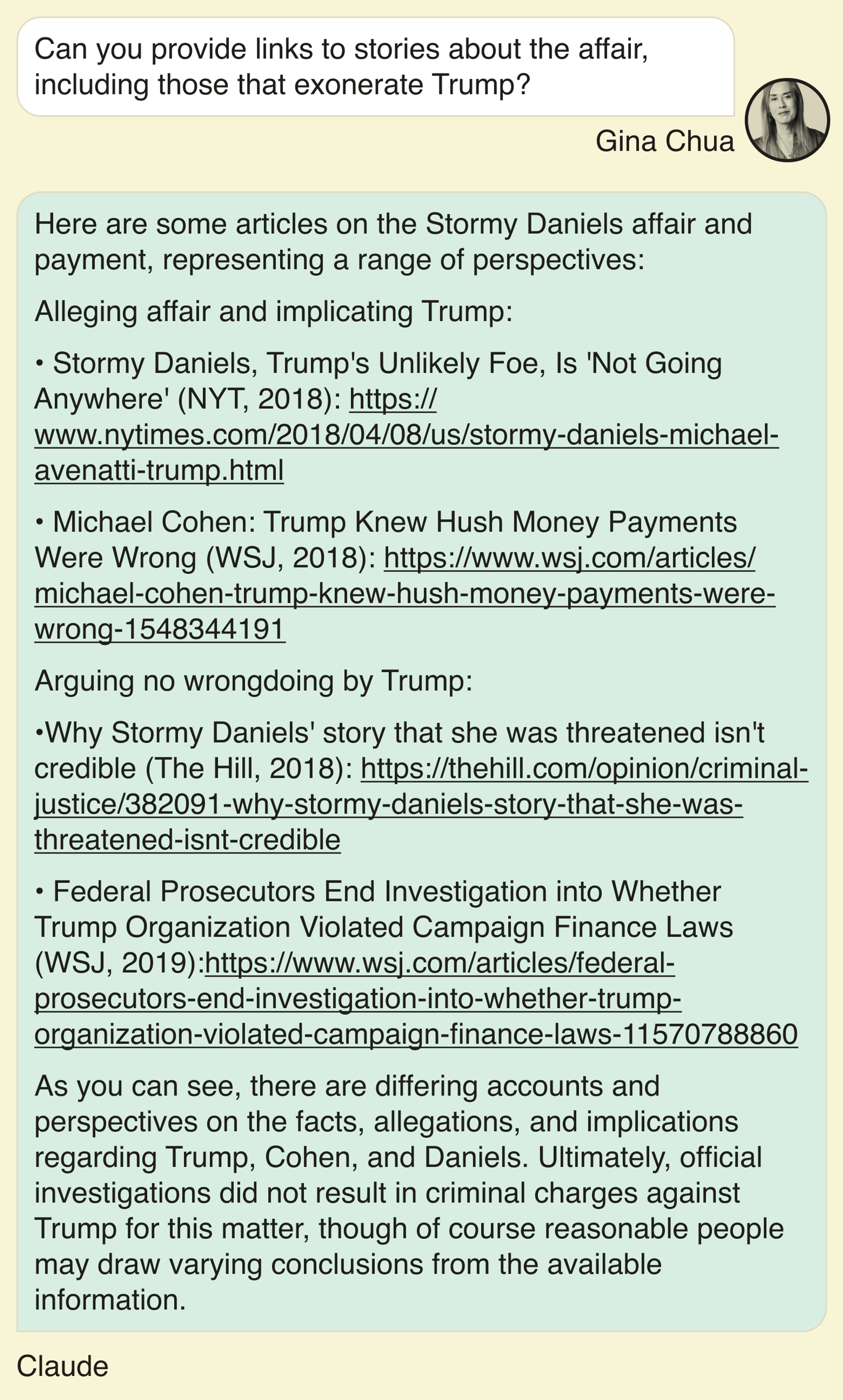

So then I asked it for links to stories, as a Google search might have turned up:

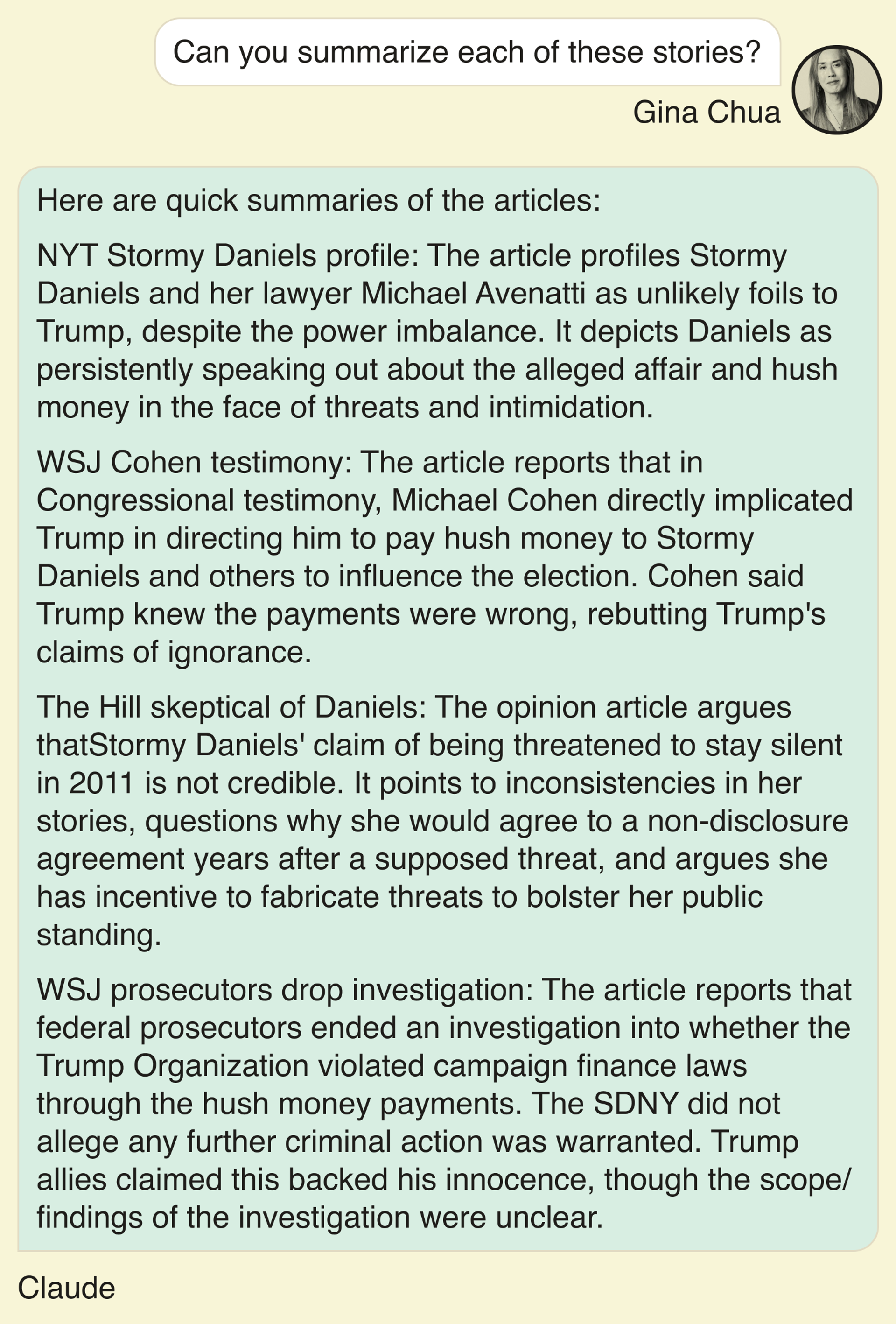

That’s helpful — and I’m sure a dedicated search engine would have provided even better links. But that’s a lot of reading to do, and how would I know which ones to dig into? I asked it to summarize the articles it linked to:

Much easier to digest, and actually gives a sense of the issues surrounding the question.

What if we had simply skipped all those steps, and instead, my original query just returned those summaries, with links, not unlike a Google search, but with more useful answers that don’t require as much clicking and reading?

To put it another way, why do tech companies seem so intent on blowing up the entire search experience when incremental changes could yield significant improvements?
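For readers who think in code, the three-step pipeline proposed above (a language model turns the question into a search term, a conventional search system finds and ranks pages, and a language model summarizes each page while keeping its link) might look roughly like the sketch below. Every name in it is hypothetical, and the three `toy_*` functions are deliberately crude stand-ins that run locally on placeholder text: a real system would presumably call a chatbot for the first and third steps and a search engine for the second. It is an illustration of the idea, not a description of how any company's product works.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Page:
    url: str
    title: str
    text: str


@dataclass
class SourcedSummary:
    url: str
    title: str
    summary: str


def answer_with_sources(
    question: str,
    rewrite_query: Callable[[str], str],
    search: Callable[[str], List[Page]],
    summarize: Callable[[str], str],
    max_results: int = 3,
) -> List[SourcedSummary]:
    """Language model in, search engine in the middle, language model out."""
    search_term = rewrite_query(question)      # language skill
    pages = search(search_term)[:max_results]  # search and ranking skill
    # Language skill again, but every summary keeps the link it came from.
    return [SourcedSummary(p.url, p.title, summarize(p.text)) for p in pages]


# --- Hypothetical stand-ins so the sketch runs without any external service ---

STOPWORDS = {"how", "are", "is", "the", "a", "of", "did", "do", "what"}

# Placeholder pages; the URLs and text are invented for illustration only.
CORPUS = [
    Page("https://example.com/vaccines", "Vaccines explainer",
         "Placeholder article text about vaccines. More detail would follow here."),
    Page("https://example.com/city", "City population explainer",
         "Placeholder article text about a city. More detail would follow here."),
]


def toy_rewrite(question: str) -> str:
    """Stand-in for a chatbot: drop punctuation and filler words."""
    words = [w.strip("?.,!").lower() for w in question.split()]
    return " ".join(w for w in words if w and w not in STOPWORDS)


def toy_search(term: str) -> List[Page]:
    """Stand-in for a search engine: rank pages by keyword overlap."""
    terms = set(term.split())

    def score(page: Page) -> int:
        words = (page.title + " " + page.text).lower().replace(".", " ").split()
        return len(terms & set(words))

    ranked = sorted(CORPUS, key=score, reverse=True)
    return [p for p in ranked if score(p) > 0]


def toy_summarize(text: str) -> str:
    """Stand-in for a chatbot: keep only the first sentence."""
    return text.split(". ")[0].rstrip(".") + "."


if __name__ == "__main__":
    results = answer_with_sources(
        "How effective are COVID-19 vaccines?",
        toy_rewrite, toy_search, toy_summarize,
    )
    for item in results:
        print(f"{item.title}: {item.summary} ({item.url})")
```

The point of the design is that the middle step stays a search-and-ranking problem, and the language model never has to produce a fact without a link attached to it.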

Room for Disagreement

Google has made a long list of iterative, and impressive, improvements to its search product over the years, many of them focused on ensuring that the pages it surfaces are in fact authoritative and relevant, and others on better understanding the natural-language queries that users type in.

It’s also improved the output, and many queries now return a list of likely questions and answers lifted from web pages verbatim, saving readers the effort of digging through a host of links. Most of its AI improvements lie under the hood, so to speak, rather than in the flashier user experiences that chatbots promise.

And Microsoft says it’s doing similar work, using language models both to better understand queries and to generate summaries of the information that its search engine technology surfaces, complete with links and citations to sources.

As for questions where the data is clearly defined and constrained — airline fares, or prices for comparison shopping, for example — and where the purpose is less to discover nuanced ideas and insights and more to find specific information (booking a trip from A to Z on a given day), chatbots could significantly improve the search experience.

Notable

- Jeremy Wagstaff makes a convincing case that chatbots’ conversational capabilities are as much a bug as a feature; their ability to converse and take queries in different directions makes them inherently unpredictable and hence dangerous in a world where we expect machines to provide defined services.

- Joshua Benton argues at Nieman Lab that a pivot by search engines to providing algorithmically generated answers, without links, will have a huge financial impact on publishers dependent on traffic from search results.

- Reuters examined the costs of providing chatbot-generated answers compared to traditional link-based search results, and found that chatbot answers are much more expensive in terms of the computing power needed.