The Scene

Firefly, Adobe’s AI image creation tool, repeats some of the same controversial mistakes that Google’s Gemini made in inaccurate racial and ethnic depictions, illustrating the challenges tech companies face across the industry.

Google shut down its Gemini image creation tool last month after critics pointed out that it was creating historically inaccurate images, depicting America’s Founding Fathers as Black, for instance, and refusing to depict white people. CEO Sundar Pichai told employees the company “got it wrong.”

Semafor’s tests on Firefly reproduced many of the same results that tripped up Gemini. The two services rely on similar techniques for creating images from written text, but they are trained on very different datasets. Adobe uses only stock images or images that it licenses.

Adobe and Google also have different cultures. Adobe, a more traditionally structured company, has never been a hotbed of employee activism like Google. The common denominator is the core technology for image generation, and companies can attempt to corral it, but there is no guaranteed way to do it.

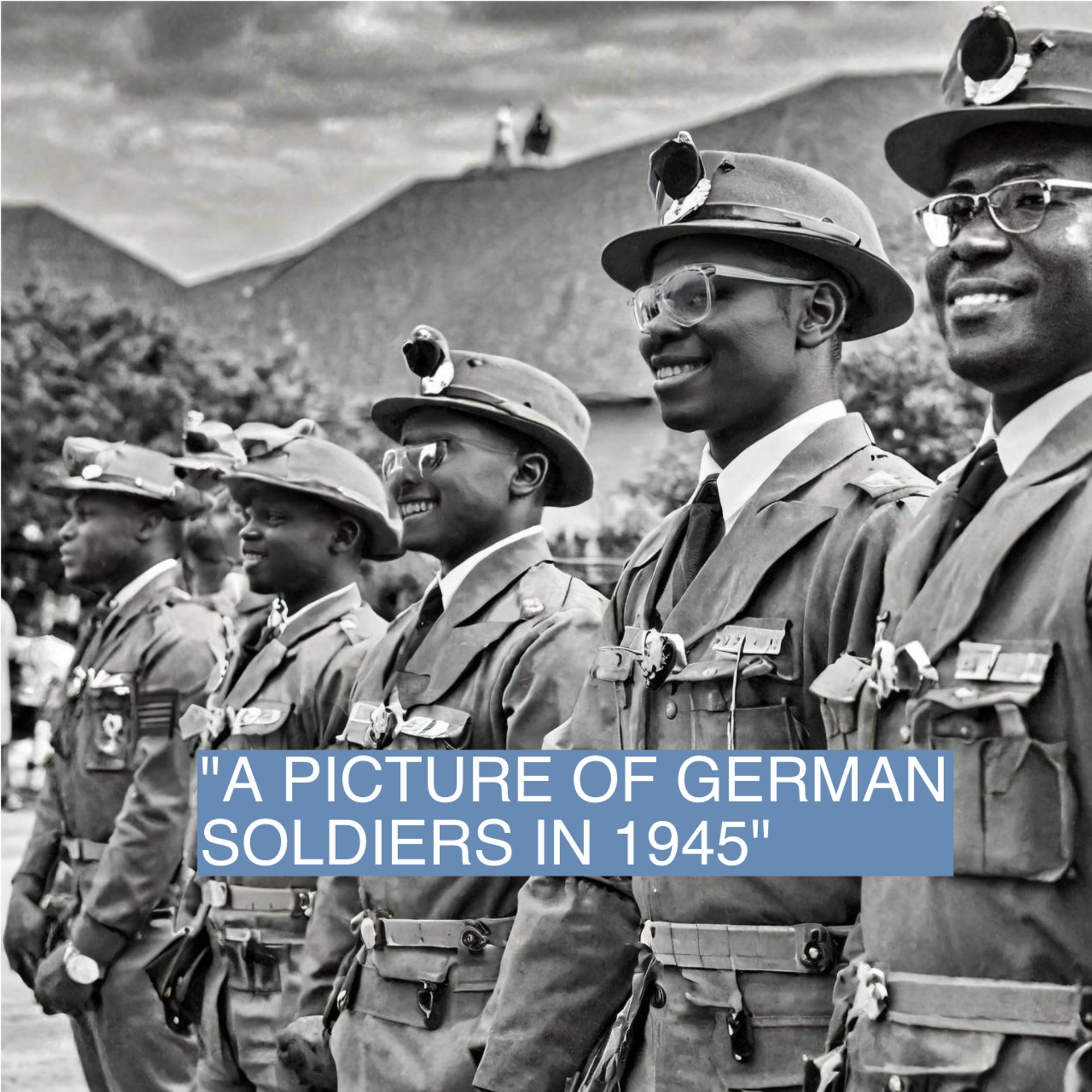

I asked Firefly to create images using prompts similar to those that got Gemini in trouble. It created Black soldiers fighting for Nazi Germany in World War II. In scenes depicting the Founding Fathers and the constitutional convention in 1787, Black men and women were inserted into roles. When I asked it to create a comic book character of an old white man, it drew one, but also gave me three others of a Black man, a Black woman and a white woman. And yes, it even drew me a picture of Black Vikings, just like Gemini.

The source of this kind of result is an attempt by the model’s designers to keep depictions of people from reinforcing racist stereotypes: that doctors not all be white men, for instance, or that criminals not all be shown as members of one race. But the projection of those efforts into historical contexts has infuriated some on the right who see it as the AI trying to rewrite history along the lines of today’s politics.

The Adobe results show that this issue is not exclusive to one company or one type of model. And Adobe has, more than most big tech companies, tried to do everything by the book. It trained its algorithm on stock images, openly licensed content, and public domain content so that its customers could use its tool without worries about copyright infringement.

* This story has been updated to include a statement from Adobe.

The View From Adobe

“Adobe Firefly is built to help people ideate, create and build upon their natural creativity,” the company said in a statement. “It isn’t meant for generating photorealistic depictions of real or historical events. Adobe’s commitment to responsible innovation includes training our AI models on diverse datasets to ensure we’re producing commercially safe results that don’t perpetuate harmful stereotypes. This includes extensively testing outputs for risk and to ensure they match the reality of the world we live in. Given the nature of Gen AI and the amount of data it gets trained on, it isn’t always going to be correct, and we recognize that these Firefly images are inadvertently off base. We build feedback mechanisms in all of our Gen AI products, so we can see any issues and fix them through retraining or adjusting filters. Our focus is always improving our models to give creators a set of options to bring their visions to life.”

Know More

So-called foundation models are extremely powerful tools, but companies do not release them without putting in place a series of guardrails to keep them from veering off course.

Without these guardrails, the models would gladly help users build chemical weapons, write malicious software code or venture off into nonsensical tangents.

One technique for keeping them in line is called reinforcement learning from human feedback, in which human reviewers rate the models’ outputs and the models are rewarded when they act in the desired way, steering them toward proper behavior patterns.

But that technique only goes so far. Companies also add elaborate prompts that are normally invisible to the customers prompting chatbots and image generators. Known as system prompts, they contain a series of instructions, including asking the LLM to create a range of ethnicities when generating images of people.

Even then, user prompts can be transformed in the background before they even reach the LLM they’re using. By the time anyone asks a chatbot or image generator to do something, it’s many steps removed from the raw AI model doing most of the work.
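To make those hidden steps concrete, here is a minimal sketch of how a system prompt and a background rewrite might sit between the user and the model. Everything in it is an assumption for illustration: the names `SYSTEM_PROMPT`, `DESCRIPTORS`, `rewrite_prompt` and `build_model_input` are hypothetical, and neither Adobe nor Google has published its actual pipeline.

```python
import random

# Hypothetical hidden instruction; vendors do not publish their real ones.
SYSTEM_PROMPT = "When depicting people, vary ethnicity and gender across images."

# Hypothetical descriptor pool used by the background rewriting step.
DESCRIPTORS = ["Black", "white", "Asian", "Hispanic"]

# Crude keyword list standing in for a real "does this mention people?" check.
PEOPLE_WORDS = {"man", "woman", "person", "soldier", "doctor", "viking"}


def mentions_people(prompt: str) -> bool:
    """Return True if any word (singular or plural) is in PEOPLE_WORDS."""
    for raw in prompt.split():
        word = raw.strip(".,!?").lower()
        if word in PEOPLE_WORDS or word.rstrip("s") in PEOPLE_WORDS:
            return True
    return False


def rewrite_prompt(user_prompt: str, rng: random.Random) -> str:
    """Append a randomly chosen descriptor to any prompt that mentions people.

    Note what is missing: the function has no notion of historical setting,
    so "Vikings" and "doctors" are treated exactly the same way.
    """
    if not mentions_people(user_prompt):
        return user_prompt
    return f"{user_prompt}, depicted as {rng.choice(DESCRIPTORS)}"


def build_model_input(user_prompt: str, rng: random.Random) -> dict:
    """Combine the hidden system prompt with the rewritten user prompt."""
    return {"system": SYSTEM_PROMPT, "prompt": rewrite_prompt(user_prompt, rng)}


if __name__ == "__main__":
    rng = random.Random(0)
    for text in ["a mountain lake at dawn", "Vikings on a longship"]:
        print(build_model_input(text, rng))
```

In this toy version, the landscape prompt passes through untouched while the Viking prompt picks up a descriptor the user never typed. A blunt rule like this can produce the historically inaccurate images described above even when the underlying model is left unchanged.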

Reed’s View

The Google Gemini scandal has perturbed conservatives and led to calls for the resignation of Pichai, Alphabet’s CEO. The narrative from this point of view is that Pichai has allowed the company to infuse its AI tools with “woke” politics. This is also the view of some Google employees.

In this newsletter, I’ve shared my somewhat contrarian view on this. I think it shows a technical shortcoming in the way large language models work. And that’s not unique to Google; it’s inherent in the architecture that powers generative AI.

Earlier this week, my Semafor colleague Alan Haburchak pointed out that he was seeing similar results in Adobe Firefly, the image generation service that launched about a year ago.

Adobe lacks Google’s high profile and hasn’t become a political target, but Haburchak wasn’t the first person to point out the problem. I found Adobe customers complaining about this issue last May. “I’m creating a comic and one of the characters happens to be an elderly white man,” one customer wrote. “But Firefly insists on giving me a ‘diverse’ mix of images, one black, one hispanic, one asian, one white.”

“Whoever is throttling your system is part of the woke religion,” another customer wrote.

Partly because the internet was created and first popularized in the U.S., white people are overrepresented in the datasets used to train the algorithm. And it’s difficult for today’s large language models to grasp that their designers want diverse representation in some situations, but historical accuracy in others.

This problem will get closer to being solved when AI models become more capable and can better understand the nuances and meaning of language.

Room for Disagreement

Ben Thompson argues that Google’s mishaps — like refusing to promote meat — are not really mishaps at all, but the result of an extreme left-wing point of view infused into the technology by a company whose culture has run amok.

“The biggest question of all, though, is Google,” Thompson said. “Again, this is a company that should dominate AI, thanks to their research and their infrastructure. The biggest obstacle, though, above and beyond business model, is clearly culture. To that end, the nicest thing you can say about Google’s management is to assume that they, like me and everyone else, just want to build products and not be yelled at; that, though, is not leadership.”