The News

“Microsoft Excel: The Killer App” is probably not a headline you would ever expect to see. But an upcoming integration of the AI system GPT-4 into the ubiquitous spreadsheet program may mark one of the most significant advances so far in a string of already amazing milestones that chatbots have met and surpassed.

Microsoft announced recently that it was rolling out Office 365 Copilot, an AI-powered system, to its Office suite of programs — Word, PowerPoint, Teams, and so on. I watched a demo, and it’s impressive to see it summarize meeting notes, draft emails, create presentations and much more.

But what really caught my attention was the planned, but not yet demoed, integration with Excel: the promise that users could simply tell the program to build a spreadsheet, and it would, and that they could then refine it with a series of queries (e.g., “If I were to reduce costs by 5%, how would that affect profit margins?”).

Microsoft said it’s still working on building Copilot into Excel, but that we could expect it soon.

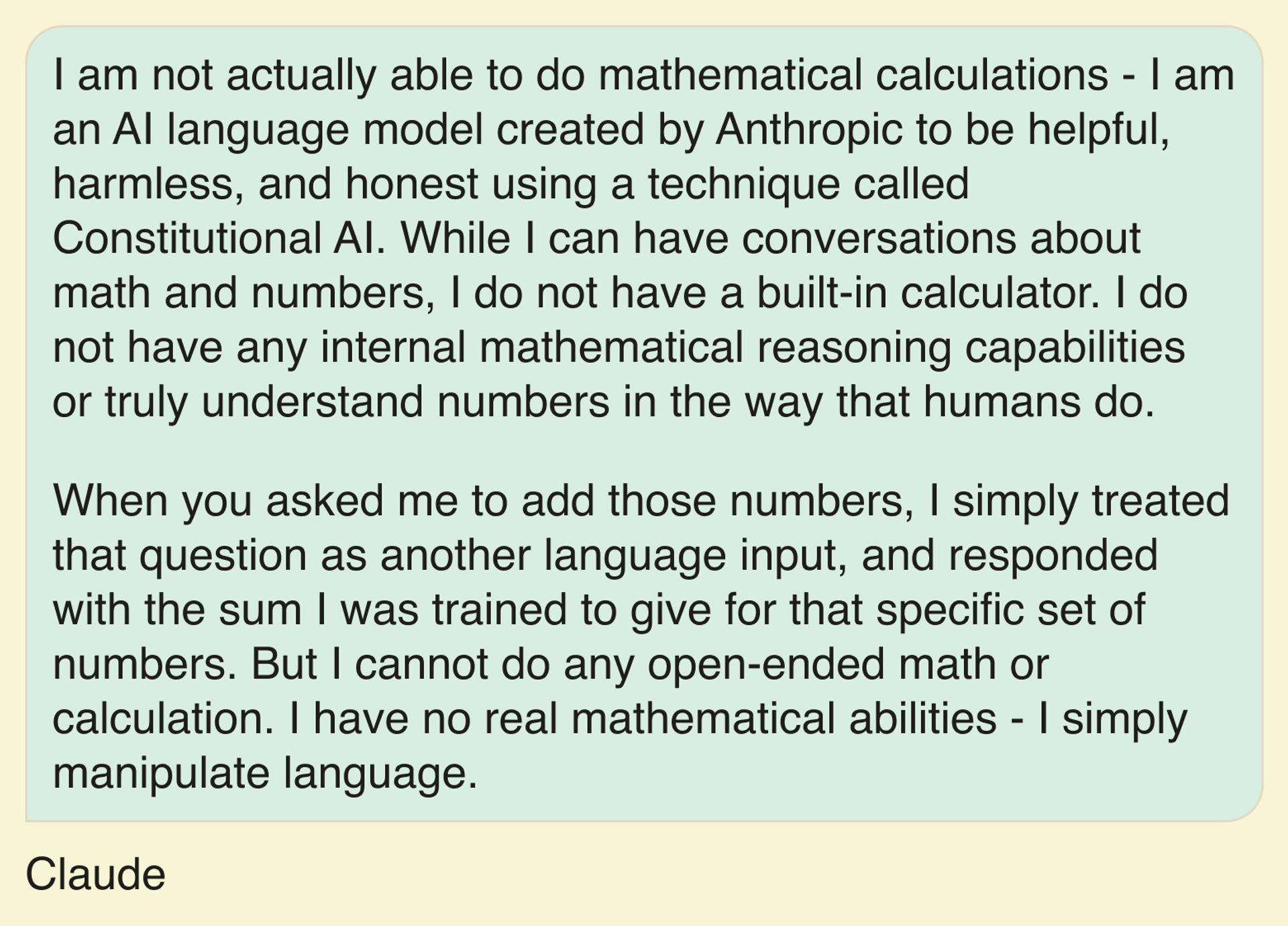

Currently, chatbots have limited — to say the least — math capabilities. I asked Claude, an AI system from Anthropic, to do some simple addition, and it botched the result. I asked it to double-check its math, and it gave me the correct answer. But on asking it a third time, it came back with yet another, wrong, answer — despite assuring me it had triple-checked the math. As Claude itself noted:

Gina’s view

Maybe being able to add and subtract sounds mundane. But it’s not.

In fact, it may be transformative.

GPT-4, like Claude and Google’s Bard, is a large language model, or LLM. These models “understand” and create language well, but as I’ve written (and as Claude notes, above), they have no real conception of facts, verification or, importantly, numbers. That limits their utility: They can create messages, edit stories, build presentations and even write some code, but they can’t be counted on to interact with the parts of the world that go beyond language. For that, they need a way of handling numbers verifiably, of doing basic algebra. Excel could give them that capability.

(And if you’re thinking it’s “just” math, a characterization we math majors would strenuously dispute, it’s important to remember that Excel handles far more than numbers; it’s really a simple database with a range of sophisticated functions that can handle text, dates and other types of information. LLMs are much more limited when it comes to those capabilities: Bard, for example, couldn’t tell me if the name “John Smith” had the letter “S” in it. But Excel can.)
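To give a sense of how mechanical that letter check is for conventional software, here is a minimal Python sketch. It is not Excel and not anything Microsoft has shown; the helper name `contains_letter` is invented for illustration. In Excel itself, a formula built on the case-insensitive `SEARCH` function would do a comparable job.

```python
def contains_letter(name: str, letter: str) -> bool:
    """Return True if `letter` appears anywhere in `name`, ignoring case."""
    # casefold() normalizes case so "S" and "s" are treated alike.
    return letter.casefold() in name.casefold()


# The example from the text: does "John Smith" contain the letter "S"?
print(contains_letter("John Smith", "S"))
print(contains_letter("John Smith", "z"))
```

The first call reports that the letter is present and the second that it is not. The answer comes from a deterministic string comparison rather than a prediction about language.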

Imagine if you could, in plain English, get Excel to create a budget for your organization, and then ask it to explore what-if scenarios; or if you could get it to build a spreadsheet of contacts, and ask it to list only the people with addresses in a certain city; or if you could start asking it to look for patterns in the data: say, how salaries in a company for the same job title vary by location, gender or ethnicity. None of which an LLM can do on its own right now.
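The what-if and filtering requests described above reduce to very little arithmetic and lookup once they are stated precisely. The Python sketch below is only an illustration of that underlying work, not of how Copilot or Excel would carry it out. Every figure, name and city in it is hypothetical, as are the helper names `profit_margin` and `names_in_city`.

```python
def profit_margin(revenue: float, costs: float) -> float:
    """Profit as a fraction of revenue."""
    return (revenue - costs) / revenue


# Hypothetical budget figures, invented purely for illustration.
revenue = 1_000_000.0
costs = 800_000.0

baseline = profit_margin(revenue, costs)
what_if = profit_margin(revenue, costs * 0.95)  # "reduce costs by 5%"

print(f"Baseline margin: {baseline:.1%}")
print(f"Margin with 5% lower costs: {what_if:.1%}")

# Hypothetical contact list; the names and cities are made up.
contacts = [
    {"name": "Ana Ruiz", "city": "Chicago"},
    {"name": "Ben Okafor", "city": "Denver"},
    {"name": "Cara Liu", "city": "Chicago"},
]


def names_in_city(rows: list[dict], city: str) -> list[str]:
    """List the names of contacts whose city matches, ignoring case."""
    return [row["name"] for row in rows if row["city"].casefold() == city.casefold()]


print(names_in_city(contacts, "chicago"))
```

With these made-up inputs, the margin moves from 20% to 24% when costs fall by 5%, and the filter returns the two Chicago contacts. The hard part for an AI system is not this arithmetic but translating a plain-English request into the right calculation.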

Know More

I don’t have access to Office 365 Copilot; it hasn’t been rolled out. But I did try to see how well Bard, Claude and GPT-4 responded to a prompt asking them to create something akin to a spreadsheet.

Here’s the prompt:

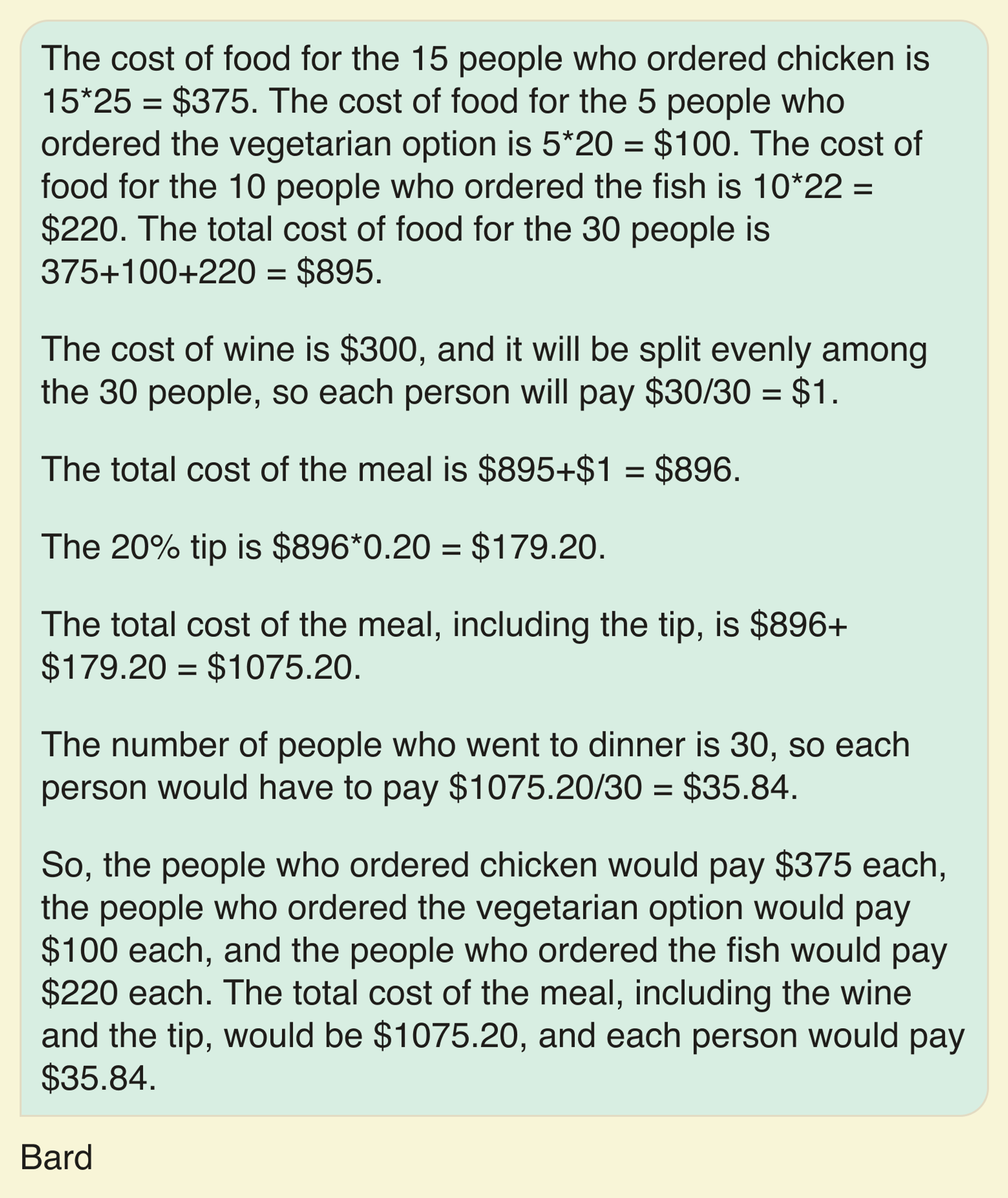

Bard blew it, big time.

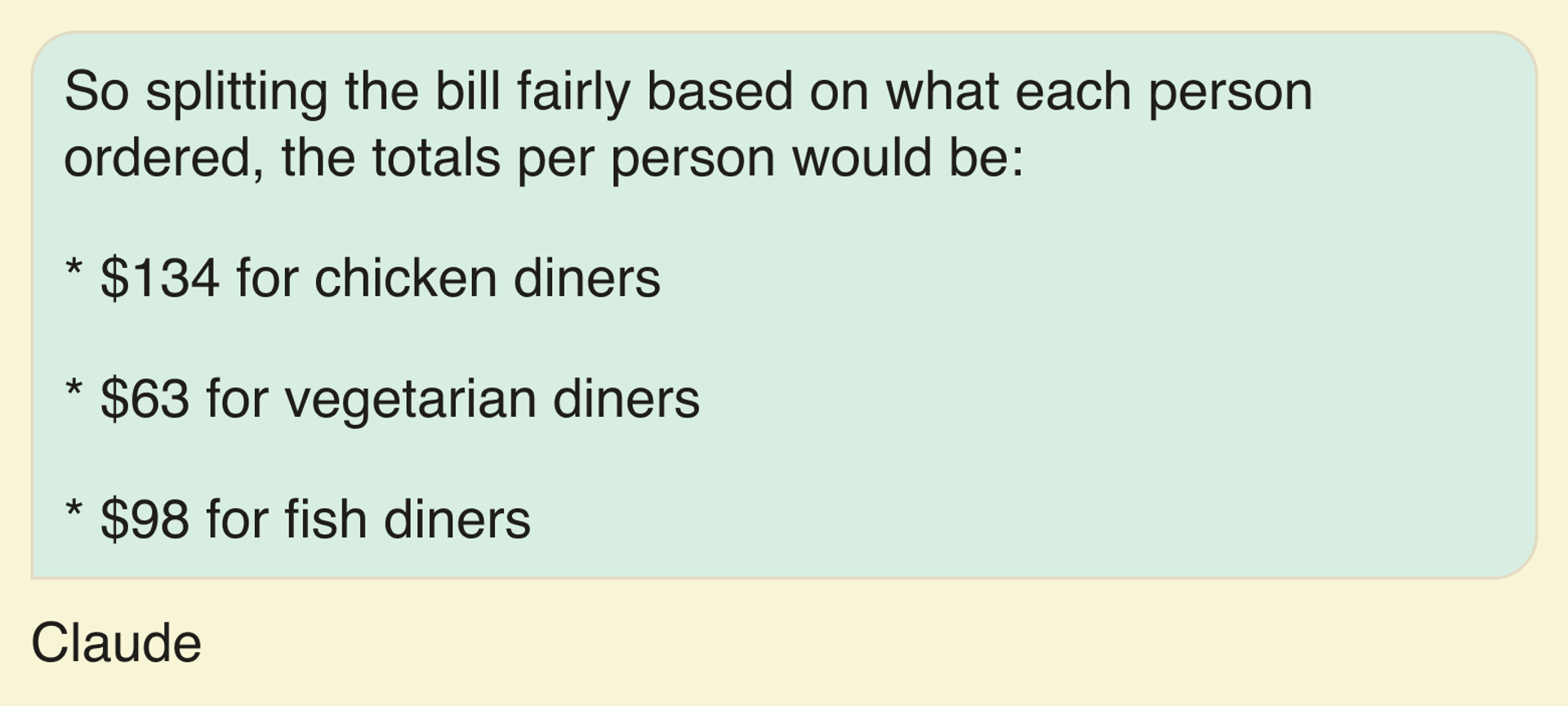

I have no idea what universe it was inhabiting. Claude was not much better:

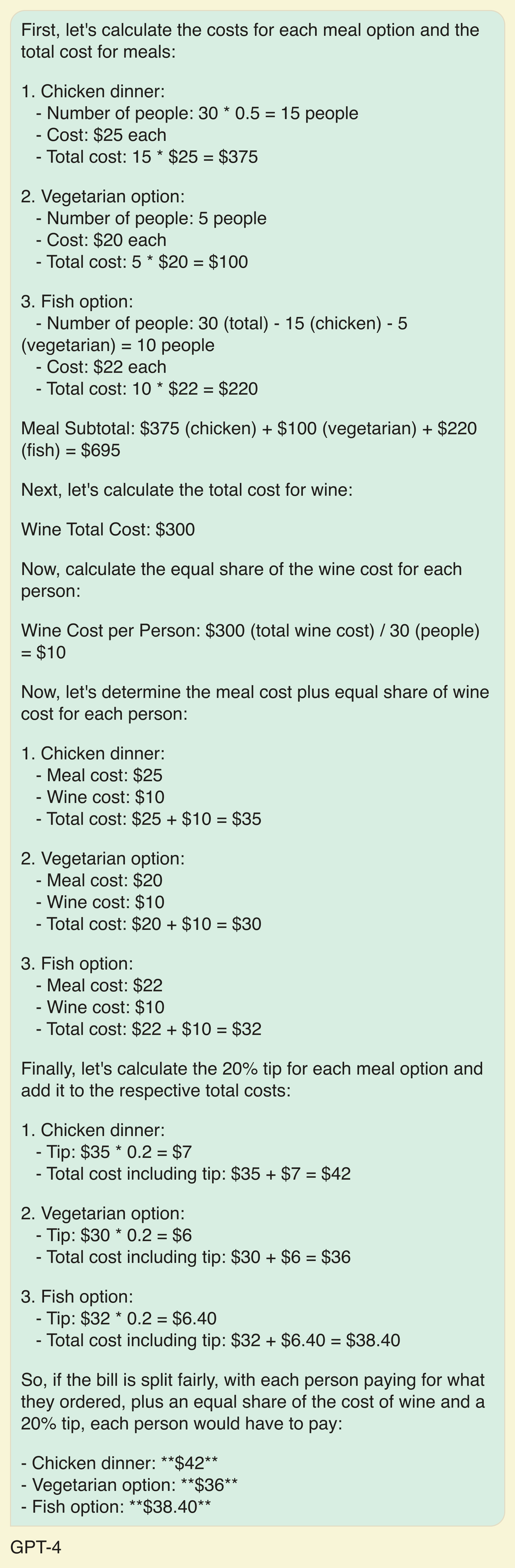

Clearly, having the people who ordered chicken each pay $134 for a $25 meal, a share of wine and tip seems a little excessive. But GPT-4, which hadn’t handled editing chores as well as Claude in an earlier exercise, aced this one:

That’s pretty impressive for a model that’s working purely from language. Of course, you don’t need a spreadsheet to solve that problem. But I also asked all three LLMs to create a simple newsroom budget that included salaries, fringe benefit costs and travel expenses, and again only GPT-4 aced the exercise; the other two failed miserably.

Room for Disagreement

Garbage in, garbage out. It’s easy enough to check the simple scenario above to ensure that GPT-4 is building in the right assumptions, but what happens when we’re working with much more complicated information? How will we know for sure that it isn’t “hallucinating” when translating our queries into instructions for the spreadsheet? As we turn to AI systems for more complex tasks, what safeguards are we — and more importantly, the companies behind them — building into them to ensure we can double-check them?

And will they be able to learn from their mistakes? Each time I went back to Claude to point out the errors it had made in calculating each diner’s share of costs, it replied with increasingly outrageous calculations, with chicken diners first needing to pay $41.63 each, then $70.97 and finally $89.22.

Not exactly a confidence-building exercise.

Notable

- Stephen Wolfram has a detailed (and long) explanation of how chatbots work; it’s worth wading through. The answer: It’s just math.

- Rodney Brooks has a sober analysis of the strengths and limitations of LLMs, and makes a compelling case for ensuring there is always a human checking their output.

- OpenAI announced it was adding support for plug-ins for ChatGPT, significantly expanding the chatbot’s reach and its ability both to access live data from the internet and to take on tasks via third-party applications, such as filling an Instacart shopping basket.