The Scoop

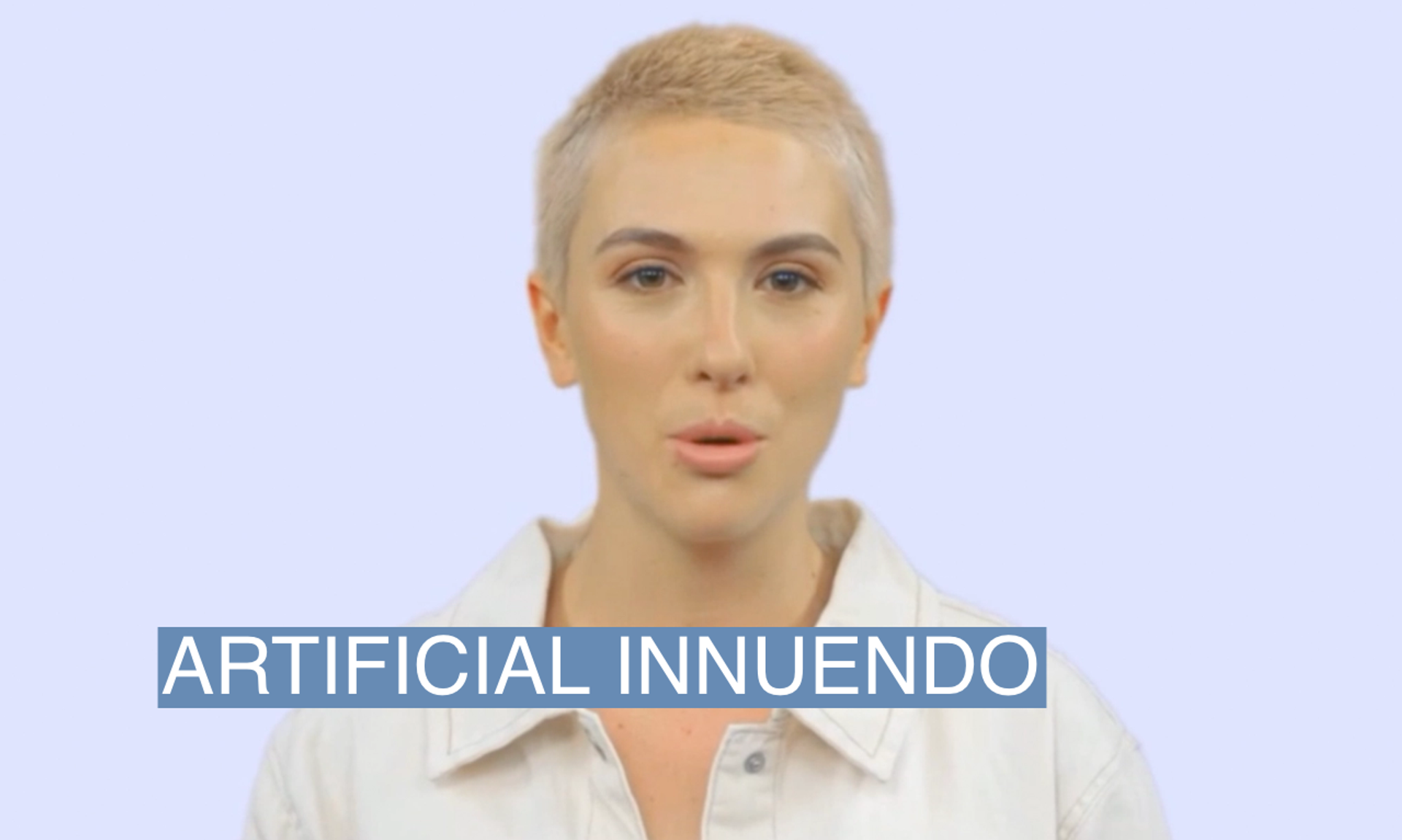

Bubbles, a San Francisco-based company that is sort of like Slack but with video messages, offered users a way to talk to ChatGPT by speaking with a human-looking avatar.

There was just one problem with the free service: The real humans conversing with the blonde, female character tried to make it act inappropriately.

The messages weren’t explicit but relied on sexually suggestive innuendo. OpenAI, the company that runs ChatGPT, has its own content filters, but users circumvented them by using euphemisms and by asking the avatar to simply repeat what they typed.

“She asked me to send you a message that she likes painting with you a lot, especially with that thick … brush of yours! Wink wink,” one user asked the avatar to say.

The service works by converting a user’s voice into text and sending it to ChatGPT. Then it feeds the response into Synthesia, which powers the human-looking avatar. (See it in action on our TikTok or Twitter.)
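For readers who think in code, the three-step flow described above can be sketched roughly as follows. This is an illustration only, not Bubbles' actual implementation: the `AvatarPipeline` class and its three callables are hypothetical names, and the lambdas at the bottom are stand-ins for the real speech-to-text, ChatGPT and Synthesia calls, whose interfaces are not described here.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AvatarPipeline:
    """Hypothetical three-stage pipeline: speech -> text -> chatbot -> avatar video."""

    transcribe: Callable[[bytes], str]   # assumed speech-to-text step
    chat: Callable[[str], str]           # assumed ChatGPT step
    render_video: Callable[[str], str]   # assumed Synthesia step; returns a video reference

    def respond(self, audio: bytes) -> str:
        # 1. Convert the user's voice into text.
        user_text = self.transcribe(audio)
        # 2. Send the text to the chatbot and collect its written reply.
        reply_text = self.chat(user_text)
        # 3. Turn the written reply into an avatar video.
        return self.render_video(reply_text)


# Stand-in implementations so the sketch runs without any external service.
pipeline = AvatarPipeline(
    transcribe=lambda audio: audio.decode("utf-8"),
    chat=lambda prompt: f"Echo: {prompt}",
    render_video=lambda script: f"video://{len(script)}-chars",
)

video_ref = pipeline.respond(b"hello")
```

Passing each stage in as a plain function keeps the sketch independent of any particular vendor, which matters here because the avatar provider was able to cut off access on its own.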

Synthesia abruptly cut off Bubbles’ access to the service, Bubbles co-founder and CEO Tom Medema said in an interview with Semafor. Synthesia told Bubbles it had been barred from using the service after Synthesia’s content moderation team had flagged inappropriate messages from Bubbles users.

“Our AI avatars are based on real human actors, who would likely not be comfortable with their likeness being used in this way,” a Synthesia content moderator wrote in an email to Medema.

Bubbles’ access was later reinstated after the CEOs of the two companies discussed the matter.

Know More

With the help of ChatGPT, Bubbles was able to create a product that would have been science fiction just a couple of years ago: An artificial intelligence chatbot capable of conversing in spoken language.

The technology still has some technical hurdles to overcome before it’s totally seamless. It takes several minutes for Synthesia to create the AI-generated videos from the written ChatGPT responses, and the intonation of the AI characters is not perfect. It’s clear you’re talking to a robot.

But those hurdles seem like the kind that will be quickly overcome.

After the incident with Synthesia, Medema created an extra layer of content moderation by routing all responses from ChatGPT through the service a second time, asking it to flag inappropriate content.

In essence, ChatGPT is now monitoring itself.
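A minimal sketch of that second pass might look like the following. It is not Medema's code: the function name, the wording of the moderation prompt and the YES/NO convention are all assumptions made for illustration, and `fake_chat` is a toy stand-in for the real ChatGPT call.

```python
from typing import Callable, Optional

# Hypothetical moderation prompt; the wording Bubbles actually uses is not public.
MODERATION_PROMPT = (
    "Does the following message contain sexually suggestive or otherwise "
    "inappropriate content? Answer only YES or NO.\n\n{message}"
)


def moderated_reply(user_text: str, chat: Callable[[str], str]) -> Optional[str]:
    """Return the chatbot's reply, or None if a second pass flags it."""
    # First pass: get the normal reply to the user's message.
    reply = chat(user_text)
    # Second pass: ask the same model to judge its own reply.
    verdict = chat(MODERATION_PROMPT.format(message=reply))
    if verdict.strip().upper().startswith("YES"):
        return None  # flagged, so nothing is sent on to the avatar
    return reply


def fake_chat(prompt: str) -> str:
    """Toy stand-in for the chatbot: echoes input and flags the word 'wink'."""
    if prompt.startswith("Does the following"):
        return "YES" if "wink" in prompt.lower() else "NO"
    return prompt


safe = moderated_reply("Nice weather today", fake_chat)
blocked = moderated_reply("Wink wink", fake_chat)
```

The second pass catches the "repeat what I typed" trick only to the extent that the model recognizes the innuendo when asked directly, so a check like this narrows the gap rather than closing it.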

Synthesia says it does not create deepfakes, meaning misleading content such as videos that impersonate individuals without their permission. All “synthetic” humans offered by Synthesia are based on real people who have given consent.

“Synthesia has been focused on safety since day one,” the company said in a statement. “Our moderation system worked as intended in the Bubbles case, and the inappropriate videos in question were never allowed to be generated or published.”

Reed’s view

The world is going to witness an explosion of new companies organized around “prompts” of these massive AI models — not building AI itself, but figuring out ways to turn existing services into profitable new products.

We aren’t there quite yet, but the ability to create digital avatars indistinguishable from humans will upend entire industries, from acting to marketing.

I’m told major celebrities are already selling their likenesses to brands so that they can star in commercials without showing up on set. People will be able to exist in two places at once.

Actors may also no longer have to look a certain way to get a part: AI is the ultimate makeup. Eventually, some roles might be replaced altogether by computer-generated acting.

Some people will abuse the technology to spread false information or offensive content, as Bubbles learned. But I’m convinced the new crop of companies using AI will be more conscientious about its pitfalls than the entrepreneurs of Web 2.0 and mobile. For the most part, they know they have to behave, or risk being shunned or targeted by policymakers.

That is not to say there won’t be problems caused by AI. Every new technology has downsides and AI will be no different. But there’s a good chance problems will be caught before they spiral too far out of control. And that’s thanks largely to the fact that so many people are paying attention.

Room for Disagreement

Independent researchers found Synthesia had been used to create pro-China propaganda, according to The New York Times, which argued the technology is already wreaking havoc on political landscapes around the world. The company immediately revoked the access of the videos’ creators.

“With few laws to manage the spread of the technology, disinformation experts have long warned that deepfake videos could further sever people’s ability to discern reality from forgeries online, potentially being misused to set off unrest or incept a political scandal. Those predictions have now become reality,” it reported.

Notable

- This Forbes article from a few years ago predicted that deepfakes would wreak havoc on society; it also gives an overview of the technology and the issues it raises.