The News

ChatGPT creator OpenAI has been using its most advanced large language model to enforce the company’s content policies, marking a major milestone in the capabilities of the technology, the firm revealed Tuesday.

Lilian Weng, OpenAI’s head of safety systems, said in an interview with Semafor that the method could also be used to moderate content on other platforms, such as social media and e-commerce sites. That job is currently done mostly by armies of workers, often located in developing countries, and the task can be grueling and traumatizing.

“I want to see more people operating their trust and safety, and moderation [in] this way,” Weng said. “This is a really good step forward in how we use AI to solve real world issues in a way that’s beneficial to society.”

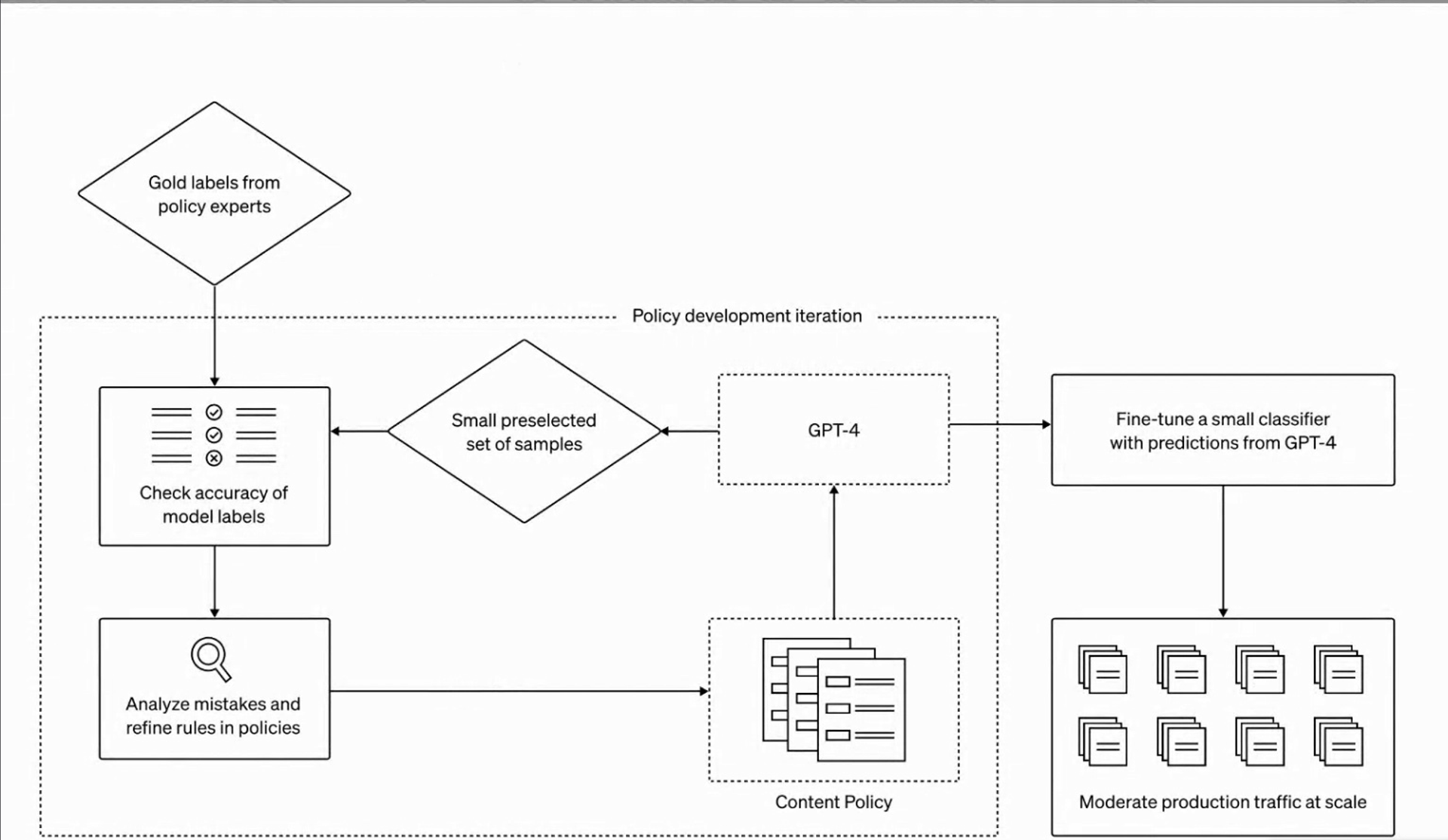

The method OpenAI used to get GPT-4 to police itself is as simple as it is powerful. First, a comprehensive content policy is fed into GPT-4. Then its ability to flag problematic content is tested on a small sample of content. Humans review the results and analyze any errors. The policy team then asks the model to explain why it made the errant decisions. That information is then used to further refine the system.
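To make the loop concrete, here is a minimal Python sketch. It is a hypothetical illustration, not OpenAI’s code. `classify`, `explain` and `refine` are assumed callables. In practice `classify` and `explain` would wrap a call to a model such as GPT-4, and `refine` would be the policy team’s revision. Here, toy keyword-matching stand-ins let the example run without any model or API.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Example:
    text: str
    human_label: str  # label from a trained human reviewer: "allowed" or "violates"

# Hypothetical signature: classify(policy, text) -> label
Classify = Callable[[str, str], str]

def find_disagreements(policy: str, sample: list[Example], classify: Classify):
    """Label a small sample under the policy; return cases where model and human disagree."""
    errors = []
    for ex in sample:
        model_label = classify(policy, ex.text)
        if model_label != ex.human_label:
            errors.append((ex, model_label))
    return errors

def iterate_policy(policy, sample, classify, explain, refine, max_rounds=5):
    """Repeat: test the policy, ask the model to explain its mistakes, revise the policy."""
    for _ in range(max_rounds):
        errors = find_disagreements(policy, sample, classify)
        if not errors:
            break  # the model agrees with the human reviewers on every sample item
        explanations = [explain(policy, ex.text, label) for ex, label in errors]
        policy = refine(policy, errors, explanations)
    return policy

# --- Toy stand-ins (assumptions) so the sketch runs without any model ---
def toy_classify(policy: str, text: str) -> str:
    # Pretend "model": flag text containing any term listed after "ban:".
    banned = {w.strip() for w in policy.removeprefix("ban:").split(",") if w.strip()}
    return "violates" if banned & set(text.lower().split()) else "allowed"

def toy_explain(policy: str, text: str, label: str) -> str:
    return f"Labeled {text!r} as {label!r} because no banned term matched."

def toy_refine(policy: str, errors, explanations) -> str:
    # Stand-in for the human policy team: after reading the explanations,
    # they add the term the policy was missing.
    return policy + ", pills"

sample = [
    Example("this is a scam", "violates"),
    Example("buy cheap pills now", "violates"),
    Example("hello friend", "allowed"),
]
final_policy = iterate_policy("ban: scam", sample, toy_classify, toy_explain, toy_refine)
print(final_policy)  # ban: scam, pills
```

In this toy run, the first pass misses "buy cheap pills now". The refined policy then labels every sample item the same way the human reviewers did, so the loop stops after the second pass.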

“It reduces the content policy development process from months to hours, and you don’t need to recruit a large group of human moderators for this,” Weng said.

The technique is still not as effective as experienced human moderators, OpenAI found. But it outperforms moderators who have had only light training.

If the method proves successful on other platforms, it could lead to a major shift in how companies handle problematic online content, from disinformation to child pornography. Weng said OpenAI is also researching how to expand the capabilities beyond text to images and video.

An OpenAI spokeswoman said the company knows of some customers already using GPT-4 for content moderation, but those customers did not give permission to be named.

Know More

Content moderation has been a vexing problem for OpenAI and other purveyors of AI chatbots. When ChatGPT was released last year, users put the content controls to the test, eliciting embarrassing or inappropriate responses and posting them to social media.

The most extreme examples came from users who were able to “jailbreak” the chatbot to get it to ignore its moderation policies, showing what the service might look like without them. A Vice reporter, for instance, was able to jailbreak ChatGPT and then coax it into describing detailed sex acts involving children.

OpenAI has been closing those “jailbreaking” loopholes, and the incidents have become less common.

The company faced more criticism for its efforts to block offensive content than for the controversial material itself. A Time investigation found that OpenAI paid workers in Kenya to help label offensive content so that it could be automatically blocked before it reached users. According to the magazine, workers were shown text describing “child sexual abuse, bestiality, murder, suicide, torture, self harm, and incest.” Some workers reported being traumatized.

Semafor reported last year that OpenAI was still using traditional methods of content moderation, and employed an outside firm to scan images produced by DALL-E before they reached users.

Weng said the previous version of OpenAI’s large language model, GPT-3, wasn’t powerful enough to reliably moderate itself. That capability emerged in GPT-4 roughly a year ago, before the model was released to the public.

Humans will still be involved in the process, both to craft and constantly update policies and to help check edge cases. But the new method will likely drastically reduce the number of people who do this work.

OpenAI acknowledges that ChatGPT’s ability to moderate itself won’t be perfect. And it was put to the test over the weekend at the DEF CON security conference, where hackers did their best to prompt ChatGPT and other large language models to produce restricted content.

“We can’t build a system that is 100% bullet-proof from the beginning,” Weng said. “At DEF CON, people are helping us find mistakes in the model and we will incorporate that, but I’m pretty confident it will be good.”

The method OpenAI is using for moderation differs from the “Constitutional AI” system used by Anthropic, a competitor founded by former OpenAI employees.

In Constitutional AI, a model is instilled with certain values that become the guiding principles for how the AI operates and what content it allows to be created.

Reed’s view

Automated content moderation has been one of the holy grails of the tech industry. Before the Cambridge Analytica scandal, companies like Facebook employed comparatively few moderators, betting instead that technology capable of automating the process would soon arrive.

That’s why it’s shocking that Facebook and Google didn’t figure this out first. It probably won’t be long before they come up with their own solutions.

Humans actually aren’t very good at content moderation. The policies are like legal textbooks. And even if an employee can remember every rule, there is a constant flow of content that falls into gray areas where the rulebook doesn’t give a clear answer.

If you want insight into how messy content moderation can be, just go back and follow the “Twitter Files,” Elon Musk’s attempt to uncover bias on his platform, now known as X. The Twitter Files show how content policies are fraught with ambiguity, which allows the political biases of individuals to seep into the decision-making process.

I wrote in 2019 about a lawsuit that alleged YouTube was discriminating against LGBT creators. One of the reasons was that content moderators in other countries flagged content from anyone who was openly gay as “sexual,” muddying the algorithmic waters.

Using large language models to moderate content is a step forward, not because it will be perfect, but because it will be more consistent and less prone to human emotion and cultural differences.

And even if human-run content moderation is still a little better, there are benefits to having a computer do it: it spares people from having to look at disturbing content all day as part of their jobs.

Room for Disagreement

Associated Press reporter Frank Bajak argues it will be difficult to ever rein in large language models like ChatGPT. “Current AI models are simply too unwieldy, brittle and malleable, academic and corporate research shows. Security was an afterthought in their training as data scientists amassed breathtakingly complex collections of images and text. They are prone to racial and cultural biases, and easily manipulated,” he wrote.

And while ChatGPT and other AI models are attacked for being too permissive, they have been and will continue to be criticized by conservatives for being too restrictive, or “woke.”

Notable

- Time detailed how GPT-3’s content controls were largely honed in Kenya, at great human cost. The article may have played a role in OpenAI’s decision to try to cut most humans out of moderating in the next version, GPT-4.