The Scene

Samsara, a company that helps businesses track and monitor assets like vehicle fleets and equipment, is using large language models to simplify the experience for customers and improve productivity.

Samsara Intelligence, which the company launched Tuesday, offers a glimpse at how LLMs, the technology behind the explosion in artificial intelligence, are finding their way into swaths of industries beyond white-collar work.

Samsara has the sort of deep pool of data that’s valuable to a model: The roughly 10-year-old, $30 billion company has spent years outfitting about 20,000 customers with its custom-built sensors.

For instance, its customers with vehicle fleets have been sharing information on maintenance schedules and error codes. Now, as AI has progressed, that data can be parsed in new ways. Customers can also query the models in plain English rather than scrolling through online dashboards to sort out vehicles in need of maintenance. In the future, the system could order maintenance automatically, rather than waiting for a human to request it.

Samsara uses a proprietary AI model to take the data and format it so that it can be more easily read by LLMs from companies like OpenAI.

And while the change isn’t revolutionary, it’s an example of how AI is beginning to lighten the mental loads of workers in subtle ways that may add up to real productivity gains for companies, potentially answering a central question hovering over the new technology.

Samsara CEO and co-founder Sanjit Biswas, who also co-founded the enterprise Wi-Fi firm Meraki, acquired by Cisco for $1.2 billion in 2012, spoke with Semafor about the ways AI has changed the company, in both expected and unexpected ways.

The View From Sanjit Biswas

Reed Albergotti: You’ve always been good at figuring out the bottlenecks in new technology trends, like with Meraki and wireless connectivity. It seems like we’re at a similar moment now with AI where it’s very cool, but there’s a lot of infrastructure that needs to be built to make it really useful. What are the bottlenecks now with AI?

Sanjit Biswas: It does remind me a lot of Wi-Fi 20 years ago, when John [Bicket] and I first started working on it. You use it for the first time and you’re like, “This is amazing.” The first time you opened a laptop and you could just sit on your couch and surf the internet, that was a really big deal and it became obvious that everyone was going to want this. But how do you make it happen?

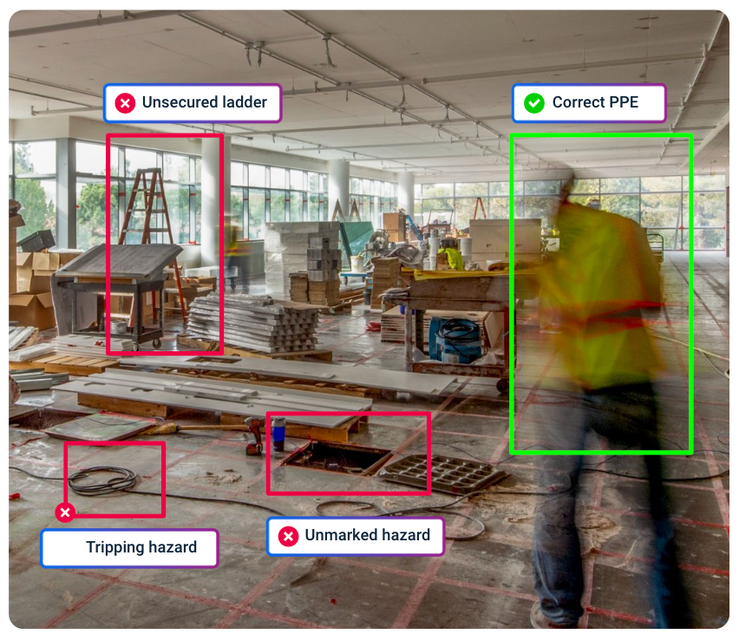

At the time, if you wanted to build a big Wi-Fi network, you kind of needed a Ph.D. in computer science. We’re seeing the same thing with sensors and AI. We have all gone through our ChatGPT moment. We know this is going to be world-changing. But then, if I want to improve my risk mitigation at my construction job site or for my trucking fleet or something like that, there’s a lot of “how do we make this happen?”

The data needs to be really clean. The model needs to be trained on data that knows the answer; you need on-the-road data. And then you need to provide the driver with some real-time feedback: “Please put down your mobile phone,” or provide them with some kind of coaching and scoring. So that’s another bottleneck we’ve tried to break. We have the data. We have the insight. How do you take the action?

You put all of this hardware in the world. Do you see opportunities for edge compute?

We actually do a lot with edge compute and AI today. We have millions of these cameras that are deployed by fleets. They run AI inference at the edge in real time. Instead of having to wait hours for footage to go to the cloud and get analyzed, it tells you within a second, “Please put down your mobile phone,” or “You’re tailgating,” and it gives you real-time coaching and feedback that’s all done with inference sitting at the edge. We use Qualcomm chips to do that. They’re very powerful compared to computers 10 or 20 years ago. These are like little supercomputers.

Are you able to run some of these newer transformer architectures?

We started with the kind of original convolutional neural network models that were state-of-the-art a couple of years ago. We’ve moved to a transformer-based architecture that helps us do a ton of things. We can actually detect all different kinds of risks. We can detect very complex cases, and it also lets us do more and more sophisticated detection. A recent one we rolled out was drowsiness detection. It turns out drowsiness can’t be detected with an image-based model. What you really want is a historic record and a transformer model that is trained on what happened five, 10, 30 seconds before accidents. There are amazing insights around how people move as they’re getting tired. And so we trained a whole model based on that and rolled it out to those cameras.

I would have thought you’d need new hardware to run these models.

We probably put out more powerful hardware than we needed because, just based on our background, we saw the technology curve here. But the models have gotten way more efficient. We have a bunch of Ph.D.s who optimize these things constantly, and this is an area that’s probably underappreciated, because you see these Nvidia press releases on how many gigaflops or teraflops they can do. The models now are 10 times more efficient than they were about a year ago. It’s the same as Moore’s law, but happening in software with algorithms. That means this little embedded device can do what used to take a small data center.

So are customers OK with paying for better hardware in anticipation of new technology down the road?

It’s provided with the license, so they don’t have to pay anything. We’re not a hardware company trying to sell you a new camera. That’s a subtle but important insight. Our customers don’t want to touch all of their equipment if they can avoid it. They prefer to just have software updates that happen over the air. Many of our customers stay on the same hardware for five-plus years, and they’re very happy about that, because it’s like a software model instead.

That was a cost you had to eat up front. You saw the trajectory of AI. Can you go into more detail about what you saw that gave you conviction in that area?

Let me get our new employee handbook from 2017. We had this chart showing Moore’s law and the GPU chart. We saw that the GPU curve was happening. We were grad students that studied computer science before. These curves tend not to stop. They just compound and compound. Most people are good at linear approximation. It’s hard to understand what that looks like when it’s 2x every year. We did that analysis for chips, and also algorithms and the throughput an algorithm can get for a certain amount of energy and compute.

What are you looking at for the next generation? I know there are chipmakers out there who say they have the secret sauce to make amazing AI run in tiny little packages.

We certainly see exotic chips in the cloud, like Groq or Cerebras. They’re all solving the same problem. It’s all part of the same technology trend, which is that AI inference is getting cheaper and cheaper. Take the large language models that came out with ChatGPT two years ago: Our estimate is it’s now 1/100th the price to run that model on a cost basis. That’s just in 24 months. These chips, they’re way more powerful, but they cost about the same as they did. So you get way more bang for the buck. At some point, that means we’ll be able to run even more models at the edge. We have a lot of headroom in our platform today, so we can just keep going with what we’ve got, but it might open up some new use cases. This applies to AI, but it also applies to connectivity. Think about what’s happened with the phone in your pocket. If you got it in the late 2000s, it was probably 3G or something. You could get your email or text, but you weren’t watching a video.

It’s almost the equivalent technology trend, but for connectivity. I just don’t think the world’s talking about that as much. The cost per megabyte or gigabyte has just dropped massively. That opened up a lot for us in terms of what we can stream from the road, what we can stream from the field. And now we have tiny asset tags that can track almost anything. It’s like a fun-size candy bar. It lasts four years on a battery. You get near-real-time tracking of it. And it’s amazing. It’s not something you could have done 10 or 20 years ago.

What do you think of the low-Earth orbit satellite explosion? I keep hearing there’s not enough bandwidth, and we’ll never get enough satellites up there.

Never say never. “You’ll never get an electric vehicle to work beyond 100 miles.” There are Teslas everywhere, right? “You’ll never be able to watch videos on your phone.” This stuff just gets so good if there’s enough volume behind it. So yeah, we’ve certainly tracked Starlink. One of the things we announced at our customer conference: We have a small company we invested in called Hubble Network. In June, we demoed on stage a tracker connecting to a low-Earth orbit satellite. It worked. We’re believers that eventually we will get to the point where you’ll be able to track things from space, and we see the technology working. It might be five to 10 years before it’s cost-effective and mainstream enough, but I think Starlink has a couple million users on it now. When you have something so useful, it just happens. The market starts pulling on it.

How does the world of IoT change when you can track anything from space?

The world is a big place. If you go up to Canada, there are parts of the Trans-Canada Highway that don’t have cell coverage, which is really hard to imagine. So I do think it’ll open up use cases where our customers are mining, or they’re building pipelines, or building roads. Those customers would love more connectivity. The idea of a lone worker being in the middle of nowhere with no one having any idea if they’re OK, I think that’ll be a foreign concept in 20 years.

Are your customers demanding AI and do they have ideas for what they’re going to do?

Our customers rarely ask for the technology. They’re not like, “Hey, can you give me a more powerful AI model?” But I’ll give you a customer example. Everyone knows Home Depot. They deliver a lot of appliances to people’s houses, so they have a big fleet. They wanted to reduce the number of accidents. They put our AI cameras on their fleet, and accidents fell 80%.

They said, “This is amazing. How can we do more of this? What are we missing?” Now they’re working on idling reduction. The drivers will leave the cars running and it’s bad for emissions and fuel consumption. So that’s what they’re doing next. We go project by project.

Can you put sensors in the aisle so someone can help answer my home improvement question like in the old days of Home Depot?

We don’t do a ton in the retail environment, but I could imagine how that might change for us. In the lumber yard, or wherever you might have pipes, we might have a camera that says, “Hey, Reed just walked into the lumber yard. He’s wandering around. He might get hurt.” Or you might know if someone tripped and fell. You could say, “A man in a gray shirt just fell around 3 o’clock. Tell me which site that happened in and pull the footage.” That used to be hours of watching video, and AI can do it super fast.

What are we all missing about this space?

We operate in a little bit of an underserved or overlooked segment of the world. But it’s big. It’s like 40% of the world’s economy, these physical operations industries. The thing that people don’t know is just how many people work in those businesses. I don’t think they’re going away anytime soon. We need roads, even with autonomous vehicles. It feels like this area has been sort of underappreciated, especially by the tech world. And there’s a lot of interesting stuff that’s happening there.

You can see our growth rate. It’s 36% to 37% year over year. That’s only possible because there’s a trend here of digitization on a massive scale.