The News

Google unveiled a powerful new quantum computer prototype that can finish in five minutes a calculation that would take today’s most powerful supercomputers ten septillion years (longer than the universe has existed), the company announced Monday.

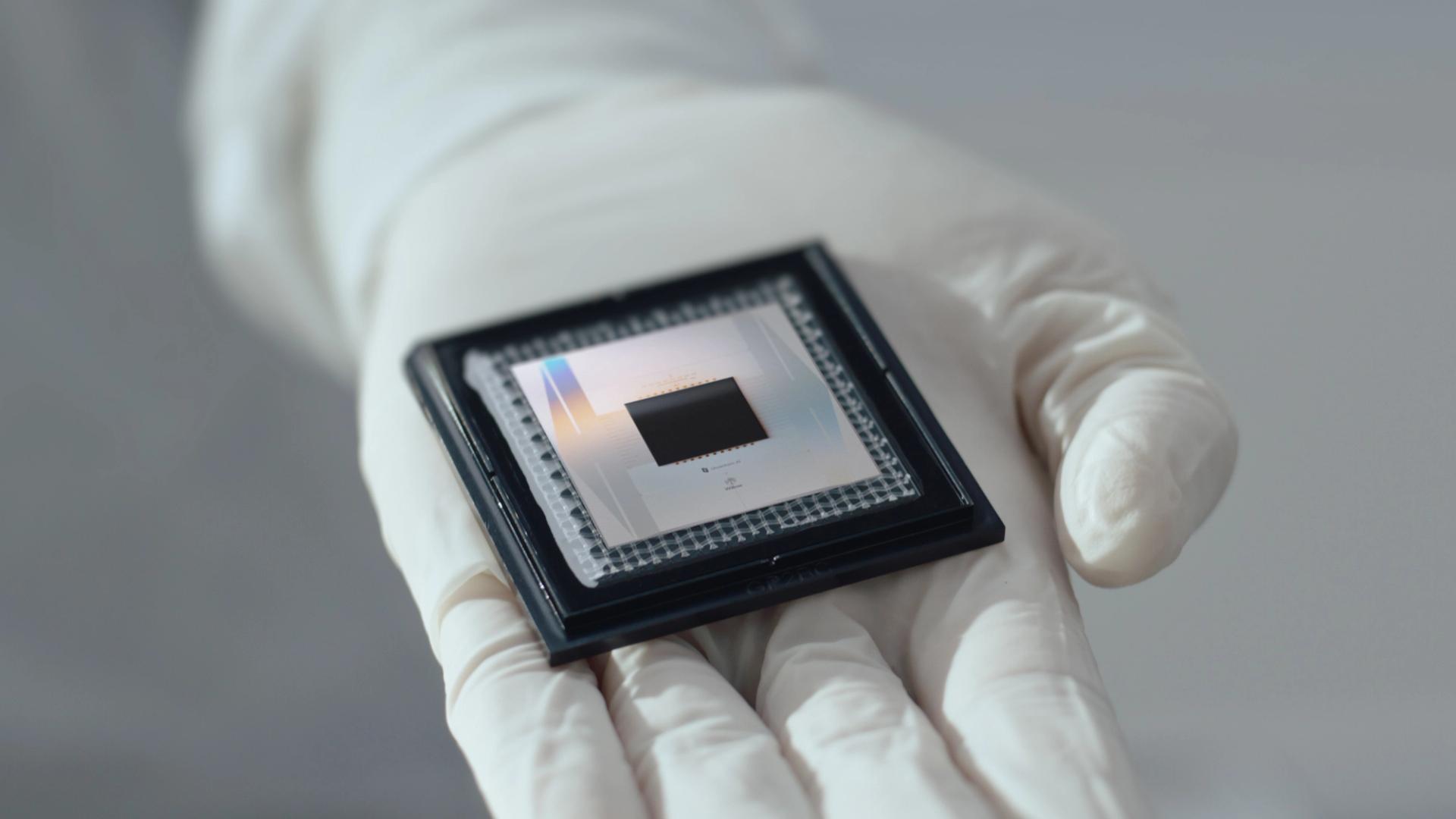

The new milestone is thanks to Willow, the company’s new quantum computing chip, which contains twice as many qubits, or quantum bits, as the previous model, called Sycamore.

The new chip also delivered a breakthrough for the field: it allowed the team to significantly reduce the number of errors quantum computers make, potentially removing a major obstacle on the road from theoretical machines to practical devices capable of turbocharging scientific discovery.

Know More

Quantum computers are unlike the ubiquitous “classical” computers we use every day. Instead of doing complex math by manipulating lots of transistors that switch back and forth between “one” and “zero,” quantum computers harness physical systems (in Google’s case, tiny superconducting circuits) that have been forced into a quantum state.

These quantum bits, or qubits, are not limited to a single binary value. A qubit can hold a weighted combination of “zero” and “one” at the same time, a phenomenon known as superposition.
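For readers who want to see superposition concretely, here is a minimal Python sketch using only the standard library. It assumes the textbook description of a single qubit as two complex “amplitudes,” one for zero and one for one; the names `hadamard` and `probabilities` are illustrative, not taken from any quantum computing library. The squared size of each amplitude gives the odds of reading out that value.

```python
import math

# A single qubit is described by two complex amplitudes: (amp_0, amp_1).
# The definite "zero" state is (1, 0); the definite "one" state is (0, 1).
ZERO = (1 + 0j, 0j)

def hadamard(state):
    """Apply a Hadamard gate, which turns a definite 0 into an equal superposition."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))

def probabilities(state):
    """Chance of reading 0 or 1 on measurement: the squared magnitude of each amplitude."""
    a0, a1 = state
    return (abs(a0) ** 2, abs(a1) ** 2)

plus = hadamard(ZERO)
p0, p1 = probabilities(plus)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")
```

Running it prints `P(0) = 0.50, P(1) = 0.50`: the qubit is neither zero nor one until it is read, and each outcome is equally likely.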

Superposition lets quantum computers perform kinds of calculations that would be impractical to ask of a classical computer. Google used a standard benchmark called “random circuit sampling” to show that its computer could carry out these calculations far faster than a classical supercomputer.
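One rough way to see why classical machines struggle with benchmarks like this: a brute-force simulation stores one amplitude for every possible combination of qubit values, so the memory required doubles with each added qubit. The sketch below assumes 16 bytes per amplitude (one double-precision complex number). Real simulators use cleverer methods, so these numbers illustrate the growth rate only; they are not the cost of the comparison Google actually ran.

```python
def state_vector_bytes(num_qubits, bytes_per_amplitude=16):
    """Memory needed to store every amplitude of an n-qubit state.

    An n-qubit state has 2**n amplitudes; the default of 16 bytes assumes
    one double-precision complex number per amplitude.
    """
    return (2 ** num_qubits) * bytes_per_amplitude

for n in (30, 50, 100):
    print(f"{n:>3} qubits: {state_vector_bytes(n):.3e} bytes")
```

Thirty qubits already need about 16 GiB this way, and every additional qubit doubles that figure.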

The other mind-bending principle that makes quantum computers work is “entanglement,” the ability of two qubits to be linked so that their states are interconnected. Albert Einstein, who helped bring the phenomenon to light, famously derided it as “spooky action at a distance.” Nobody knows exactly why entanglement happens, but it works.
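The linked behavior can be illustrated with the simplest entangled state, often written (|00⟩ + |11⟩)/√2. The Python sketch below samples measurement outcomes from that state: each qubit on its own looks like a fair coin flip, yet the two always agree. This is a classical simulation of the measurement statistics only, an assumption made for illustration; it does not reproduce everything entanglement does (for example, correlations when the qubits are measured in different ways). The function name `measure_both` is hypothetical.

```python
import math
import random

# Two-qubit state as amplitudes for the outcomes 00, 01, 10, 11.
# This is the entangled "Bell state" (|00> + |11>) / sqrt(2).
s = 1 / math.sqrt(2)
BELL = {"00": s, "01": 0.0, "10": 0.0, "11": s}

def measure_both(state, rng):
    """Sample one joint outcome, each with probability |amplitude|**2."""
    outcomes = list(state)
    weights = [abs(amp) ** 2 for amp in state.values()]
    return rng.choices(outcomes, weights=weights, k=1)[0]

rng = random.Random(0)  # fixed seed so runs are repeatable
results = [measure_both(BELL, rng) for _ in range(1000)]

# Each qubit alone looks random, but the pair always matches.
first_is_one = sum(r[0] == "1" for r in results) / len(results)
always_agree = all(r[0] == r[1] for r in results)
print(f"first qubit read 1 in {first_is_one:.0%} of runs; always agree: {always_agree}")
```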

Because quantum states are extremely fragile, qubits are difficult to control and thus prone to making errors. Very subtle disturbances, like cosmic rays passing through Earth, can knock them off course.

Errors aren’t the end of the world for computers. Classical computers make errors, too, and some of their bits are set aside specifically to detect and fix those errors.
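A classic example of setting aside extra bits for error correction is the Hamming(7,4) code, which adds three check bits to every four data bits and can locate and repair any single flipped bit. The Python sketch below is an illustration of the general idea only; it does not claim to describe the scheme any particular computer uses, and the function names are hypothetical.

```python
def hamming74_encode(data):
    """Encode 4 data bits into a 7-bit codeword.

    List index 0 holds bit position 1; check bits sit at positions 1, 2 and 4.
    """
    d3, d5, d6, d7 = data
    p1 = d3 ^ d5 ^ d7  # covers positions 1, 3, 5, 7
    p2 = d3 ^ d6 ^ d7  # covers positions 2, 3, 6, 7
    p4 = d5 ^ d6 ^ d7  # covers positions 4, 5, 6, 7
    return [p1, p2, d3, p4, d5, d6, d7]

def hamming74_decode(code):
    """Fix up to one flipped bit and return (data bits, syndrome).

    The syndrome is the XOR of the positions of all 1 bits. It is 0 for a
    valid codeword and equals the position of the bad bit after a single flip.
    """
    syndrome = 0
    for position, bit in enumerate(code, start=1):
        if bit:
            syndrome ^= position
    fixed = list(code)
    if syndrome:
        fixed[syndrome - 1] ^= 1
    return [fixed[2], fixed[4], fixed[5], fixed[6]], syndrome

codeword = hamming74_encode([1, 0, 1, 1])
corrupted = list(codeword)
corrupted[4] ^= 1  # flip bit position 5
print(hamming74_decode(corrupted))
```

This prints `([1, 0, 1, 1], 5)`: the check bits pinpoint position 5 as the damaged bit, and the original data comes back intact.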

But correcting errors in quantum computers is more difficult. In one of the more puzzling features of quantum mechanics, the act of observing, or measuring, a qubit “collapses” its state, essentially turning it back into an ordinary classical bit.
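Collapse can be modeled in a few lines, assuming the same two-amplitude description of a qubit used in textbooks: measurement returns 0 or 1 with the corresponding probability and leaves behind a definite state. The Python sketch below is a simplified model with a hypothetical `measure` function; it shows why reading a qubit directly destroys its superposition, since every later measurement just repeats the first answer.

```python
import math
import random

def measure(state, rng):
    """Measure one qubit given as (amp_0, amp_1).

    Returns the outcome and the post-measurement state: the superposition
    is gone, replaced by the definite state that matches the outcome.
    """
    a0, _ = state
    p0 = abs(a0) ** 2
    if rng.random() < p0:
        return 0, (1 + 0j, 0j)
    return 1, (0j, 1 + 0j)

rng = random.Random(42)
s = 1 / math.sqrt(2)
superposed = (s + 0j, s + 0j)  # equal mix of 0 and 1

first, collapsed = measure(superposed, rng)
# Measuring the collapsed state again always repeats the first answer.
repeats = [measure(collapsed, rng)[0] for _ in range(5)]
print(first, collapsed, repeats)
```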

To preserve the properties that make qubits so powerful (superposition and entanglement), they must be observed indirectly, using other qubits.
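The idea of checking qubits indirectly can be sketched with the textbook three-qubit bit-flip code, a much simpler cousin of the “surface code” Google uses. One logical qubit is spread across three physical qubits. Instead of reading the qubits, the code checks only whether pairs of them agree, which reveals where a flip happened without revealing, or disturbing, the stored amplitudes. In this classical simulation the parities are read straight from the amplitudes, a shortcut a real device cannot take; in hardware, extra measurement qubits do that job. All names below are hypothetical, and the sketch handles only bit flips.

```python
# Three-qubit bit-flip code, simulated as a list of 8 amplitudes.
# Bit k of a basis-state index holds the value of qubit k.

def encode(alpha, beta):
    """Spread alpha|0> + beta|1> over three qubits: alpha|000> + beta|111>."""
    state = [0j] * 8
    state[0b000] = complex(alpha)
    state[0b111] = complex(beta)
    return state

def apply_x(state, qubit):
    """Bit flip on one qubit: swap amplitudes whose indices differ in that bit."""
    flipped = [0j] * 8
    for index, amp in enumerate(state):
        flipped[index ^ (1 << qubit)] = amp
    return flipped

def parity(state, q1, q2):
    """+1 if qubits q1 and q2 agree, -1 if they differ.

    For a codeword hit by at most one bit flip, every basis state with a
    nonzero amplitude gives the same answer, so the check reveals nothing
    about alpha or beta.
    """
    seen = {1 if ((i >> q1) & 1) == ((i >> q2) & 1) else -1
            for i, amp in enumerate(state) if abs(amp) > 1e-12}
    if len(seen) != 1:
        raise ValueError("parity is not well defined for this state")
    return seen.pop()

# Which qubit to flip back for each (parity(0, 1), parity(1, 2)) result.
CORRECTION = {(1, 1): None, (-1, 1): 0, (-1, -1): 1, (1, -1): 2}

def correct(state):
    """Read the two parity checks and undo the bit flip they point to."""
    syndrome = (parity(state, 0, 1), parity(state, 1, 2))
    qubit = CORRECTION[syndrome]
    return (state if qubit is None else apply_x(state, qubit)), syndrome

original = encode(0.6, 0.8)
for bad_qubit in range(3):
    fixed, syndrome = correct(apply_x(original, bad_qubit))
    print(bad_qubit, syndrome, fixed == original)
```

Each line of output shows the damaged qubit, the parity pattern that exposed it, and `True` once the flip has been undone. Swapping in different amplitudes produces exactly the same parity patterns, which is the point: the checks locate the error without “looking at” the stored information.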

The problem has always been that, as quantum computers got larger, their error rates went up, so managing the errors became untenable as the machines scaled up.
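Simple arithmetic shows why size works against reliability. If each qubit independently has some small chance of going wrong during a step, the chance that at least one of them goes wrong climbs quickly with the number of qubits. The sketch below assumes a hypothetical 0.1% error rate per qubit and independent errors; real devices have different, correlated error patterns.

```python
def chance_of_any_error(num_qubits, error_rate):
    """Probability that at least one of num_qubits independent qubits errs."""
    return 1 - (1 - error_rate) ** num_qubits

# Hypothetical per-qubit error rate of 0.1%.
for n in (10, 100, 1000):
    print(n, round(chance_of_any_error(n, 0.001), 3))
```

With these assumed numbers, a 1,000-qubit machine would see at least one error in most steps, which is why error correction has to improve faster than machines grow.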

Yet without getting much, much larger, quantum computers won’t be able to live up to their world-changing promise, beyond proof-of-concept calculations meant to demonstrate the devices’ theoretical value and some science experiments.

But the researchers at Google’s Quantum AI division in Santa Barbara say they’ve found a method of error correction that exponentially reduces errors as the number of qubits goes up.
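To illustrate what “exponentially” means here, the sketch below uses the simplest possible code as an analogy: a classical repetition code that stores one bit as d copies and takes a majority vote. If each copy independently flips with probability p, the vote fails only when more than half the copies flip. As long as p stays below a threshold (50% for this toy code), each step up in d cuts the failure rate by a roughly constant factor; above it, adding copies makes things worse. Google’s experiment used a quantum surface code with a different threshold and scaling, and p = 0.01 is a hypothetical value.

```python
from math import comb

def majority_vote_failure(d, p):
    """Probability that a majority vote over d copies (d odd) gives the wrong bit,
    when each copy flips independently with probability p."""
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range(d // 2 + 1, d + 1))

p = 0.01  # hypothetical per-copy error rate, well below the 50% threshold
previous = None
for d in (3, 5, 7, 9):
    rate = majority_vote_failure(d, p)
    note = "" if previous is None else f"  ({previous / rate:.0f}x lower)"
    print(f"d={d}: {rate:.2e}{note}")
    previous = rate
```

The design choice worth noticing is the threshold: the same code that suppresses errors at p = 0.01 amplifies them at p = 0.6, which is why getting physical error rates low enough mattered so much.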

The discovery represents a major milestone in the field of quantum computing, but there is still a long way to go before the computers can scale up.

Scientists believe they will one day be able to make major breakthroughs in materials science and biology by conducting massive calculations at the molecular and atomic level that would be impractical for classical computers to tackle. But quantum computers’ usefulness may go well beyond the use cases that have been identified today.