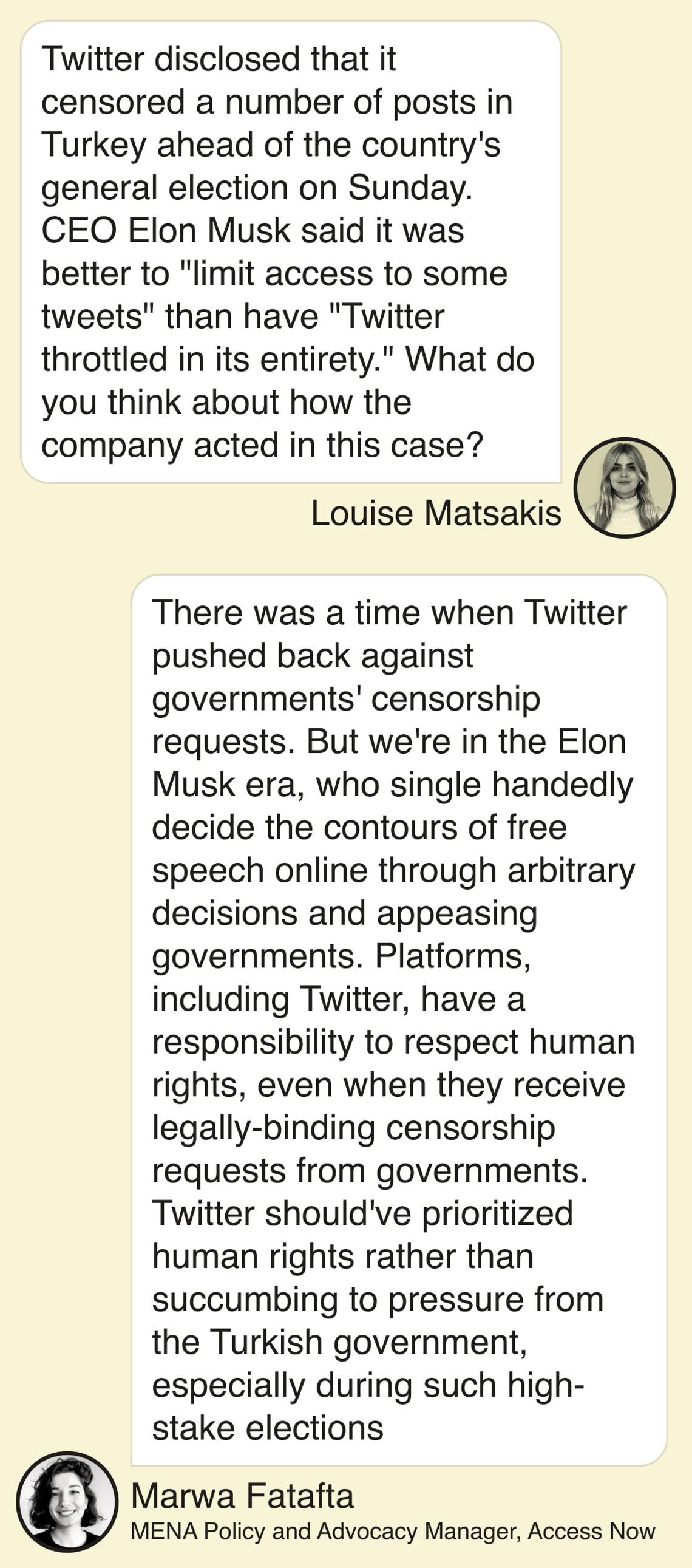

THE SCOOP

OpenAI told a leading company that provides data to Washington lobbyists and policy advocates that it can’t advertise using ChatGPT for politics.

The booming Silicon Valley startup took action after the Washington, D.C. company, FiscalNote, touted in a press release that it would use ChatGPT to help boost productivity in “the multi-billion dollar lobbying and advocacy industry” and “enhance political participation.” Afterward, those lines disappeared from FiscalNote’s press release and were replaced by an editor’s note explaining ChatGPT could be used solely for “grassroots advocacy campaigns.”

A FiscalNote spokesperson told Semafor it never intended to violate OpenAI’s rules, and that it deleted that text from its press release to “ensure clarity.”

KNOW MORE

This is the first known instance of OpenAI policing how the use of its technology is advertised. The company last updated its policies in March. They now ban people from using its models for, among other things, building products for political campaigning or lobbying, payday lending, unproven dietary supplements, dating apps, and “high risk government decision-making,” such as “migration and asylum.”

OpenAI told Semafor that it uses a number of different methods to monitor and police violations of those policies. In the case of politics specifically, the company revealed it’s working on building a machine learning classifier that will flag when ChatGPT is asked to generate large volumes of text that appear related to electoral campaigns or lobbying.

The incident between OpenAI and FiscalNote stemmed from how the latter described two intertwined products. One is “VoterVoice,” which uses AI to help well-funded Washington interests send hundreds of millions of targeted messages to elected officials in support of or in opposition to legislation.
OpenAI did not express objections to another product, SmartCheck, which uses ChatGPT to coach grassroots advocacy groups on how to improve their email campaigns by assessing things like subject lines, the number of links they include, and other factors.

Reuters/Florence Lo

LOUISE’S VIEW

ChatGPT isn’t a social media platform, but OpenAI will have to make many of the same calls that sites like YouTube and Meta have had to make about who can access their tools and under what circumstances. Regulators can push the company to be forthcoming about its decisions and about when bad actors inevitably slip through the cracks.

One major question is how well OpenAI will be able to enforce its policies in languages other than English, and whether it ends up being easier to abuse ChatGPT to manipulate elections in non-Western countries. And unlike most mainstream social media platforms, OpenAI does not yet publish regular transparency reports about how often it’s catching violators.

But OpenAI does have one important advantage that will make it easier to police ChatGPT: It can build safeguards directly into the chatbot itself, which already refuses to respond to many queries that violate the company’s rules.

ROOM FOR DISAGREEMENT

Even if OpenAI catches the majority of bad actors abusing its technology, other companies will be happy to use the same kind of AI to help clients influence politics, especially because it appears to work. When researchers from Cornell University sent over 30,000 human-written and AI-generated emails to more than 7,000 state legislators on hot-button issues like gun control and reproductive rights, they found that the lawmakers were “only slightly less likely” to respond to the automated messages.

THE VIEW FROM CHINA

In the first known case of its kind, Chinese police arrested a man earlier this month for reportedly using ChatGPT to fabricate a series of news articles about a train accident.
China recently proposed new rules for AI chatbots and implemented regulations governing the use of “deep synthesis” technologies, like deepfakes. But as the newsletter ChinaTalk notes, the man in northwest Gansu province was charged with “picking quarrels and provoking trouble,” a classic catch-all offense for activities the Chinese Communist Party doesn’t like.

NOTABLE